Disaster recovery (DR) is always a hot topic, yet many companies either do it badly or, for one reason or another, do not do it at all. Providing DR for a virtual environment can be a particularly challenging and expensive endeavor. In my enterprise I am running ESX 4 U1 on top of Brocade Fibre Channel-connected NetApp FAS arrays (one production, one DR) with a 1Gb Metro Ethernet link between sites. I do not currently have the luxury of using VMware’s Site Recovery Manager (SRM) in my environment, so my process will be completely manual. SRM removes many of the monotonous tasks of turning up a virtual DR environment, including testing your plan, but this comes with a heavy price tag. I am fortunate enough, however, to have the full suite of NetApp tools at my disposal.

SnapManager for Virtual Infrastructure (SMVI) is NetApp’s answer to vSphere VM backups for use with their storage arrays. SMVI requires its own license as well as some specific array-level licenses: SnapRestore, the applicable protocol license (NFS, FCP, or iSCSI), SnapMirror (optional), and FlexClone. There are some specific cases in which FlexClone is not required, such as in-place VMDK restores for NFS VMs. By itself, SMVI can be used instead of VCB or Ranger-type products to back up and restore VMs, volumes, or individual files within the guest VM OS. SnapMirror can be used in conjunction with SMVI to provide DR by sending backed-up VM volumes offsite to another Filer.

Here is the backup process:

- Once a backup is initiated, a VMware snapshot is created via vCenter for each powered-on VM in a selected volume, or for each VM that is selected for backup. You can choose either method but volume backups are recommended.

- The VM snapshots preserve state and are used during restores. Windows application consistency can be achieved by using VMware’s VSS support for snapshots.

- Once all VMs are snapped, SMVI initiates a snapshot of the volume on the Filer.

- Once the volume snaps are complete, the VM snapshots are deleted in vCenter.

- If you have a SnapMirror in place for your backed up volumes, it is then updated by SMVI.
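
The ordering matters here, so a small sketch may help make it concrete. The Python below only models the sequence described in the list above; it does not talk to vCenter or a Filer, and the function name and step strings are made up for illustration.

```python
from typing import List


def smvi_backup_steps(powered_on_vms: List[str], volume: str,
                      has_snapmirror: bool) -> List[str]:
    """Return the ordered steps of one backup run (illustrative model only)."""
    steps = []
    # 1. A VMware snapshot is taken through vCenter for every powered-on VM.
    for vm in powered_on_vms:
        steps.append(f"create VM snapshot: {vm}")
    # 2. Only after all VMs are snapped is the volume snapped on the Filer.
    steps.append(f"create volume snapshot: {volume}")
    # 3. The VM snapshots are deleted once the volume snapshot exists.
    for vm in powered_on_vms:
        steps.append(f"delete VM snapshot: {vm}")
    # 4. The SnapMirror update, if one is configured, always comes last.
    if has_snapmirror:
        steps.append(f"update SnapMirror: {volume}")
    return steps


if __name__ == "__main__":
    for step in smvi_backup_steps(["web01", "sql01"], "ESXLUN1", True):
        print(step)
```

The point of the model is that the VM snapshots only need to exist for as long as it takes to snap the volume, which keeps the VMware snapshot overhead short.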

NetApp fully supports, and recommends, running SMVI on the vCenter server for simplicity. Setup is very straightforward and only requires the vCenter server name and credentials, plus the storage array names or IPs and their credentials. Best practice is to set up a dedicated user in vCenter as well as on the arrays for SMVI. The required vCenter permissions for this service account are detailed in the best practices guide in the references section at the bottom of this post.

SMVI Setup

Once SMVI can communicate with vCenter, you will see the Vi datacenters, datastores and VMs on the inventory tab.

Backup configuration is simple. Name the job, choose the volume, specify the desired backup schedule, how long to keep the backups, and where to send alerts. If you’ll be using SnapMirror, make sure to check the “Initiate SnapMirror update” option.

By default the SMVI job alerts include a link to the job log but the listed address may be unreachable. In my case the links sent were to an IPv6 address even though IPv6 was disabled on the server. This can be changed by editing the smvi.override file in \Program Files (x86)\NetApp\SMVI\server\etc and adding the following lines:

smvi.hostname=vcenter.domain.com

smvi.address=10.10.10.10
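
If you would rather script that edit than do it by hand, here is a minimal Python sketch. It assumes the override file is a plain key=value file, as the two lines above suggest; the helper name `set_override` is my own, and you would pass it the real path to smvi.override yourself.

```python
from pathlib import Path
from typing import Dict


def set_override(path: Path, settings: Dict[str, str]) -> None:
    """Add or update key=value lines, leaving all other lines untouched."""
    lines = path.read_text().splitlines() if path.exists() else []
    seen = set()
    updated = []
    for line in lines:
        key, sep, _ = line.partition("=")
        key = key.strip()
        if sep and key in settings:
            # Key already present: rewrite it with the new value.
            updated.append(f"{key}={settings[key]}")
            seen.add(key)
        else:
            updated.append(line)
    # Append any keys that were not already in the file.
    updated.extend(f"{k}={v}" for k, v in settings.items() if k not in seen)
    path.write_text("\n".join(updated) + "\n")


# Example call (values taken from the lines above):
# set_override(Path("smvi.override"),
#              {"smvi.hostname": "vcenter.domain.com",
#               "smvi.address": "10.10.10.10"})
```

Running it twice leaves the file unchanged the second time, so it is safe to drop into a build or post-install script.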

Once you successfully run a backup job in SMVI you will be able to see the available snapshots for the volume on the source Filer. Note the SMVI snaps vs snaps used by the SnapMirror.

In my scenario, I am backing up the two LUNs called VMProd1_FC and VMProd2_FC, which exist in volumes called ESXLUN1 and ESXLUN2, respectively. Both of these volumes have corresponding SnapMirrors configured between the primary and DR Filers.

A couple of things to keep in mind about timing:

- It is a good idea to sync your SnapMirrors outside of SMVI as well, which reduces the time it takes when SMVI updates the mirrors. Just make sure to do this at a time other than when your SMVI jobs are running!

- If you are using deduplication on these volumes (you should be), schedule it to run at a time when you are not running SMVI backups or syncing SnapMirrors.
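
A quick way to sanity-check that these windows do not collide is to write them down and test every pair. The sketch below is just that check in Python; the job names and times are invented examples, and it assumes each window starts and ends on the same day (no windows crossing midnight).

```python
from datetime import time
from itertools import combinations
from typing import Dict, List, Tuple

Window = Tuple[time, time]  # (start, end) on the same day, start < end


def overlapping(windows: Dict[str, Window]) -> List[Tuple[str, str]]:
    """Return every pair of named windows that overlap in time."""
    clashes = []
    for (a, (a_start, a_end)), (b, (b_start, b_end)) in combinations(
            windows.items(), 2):
        # Two intervals overlap when each starts before the other ends.
        if a_start < b_end and b_start < a_end:
            clashes.append((a, b))
    return clashes


if __name__ == "__main__":
    schedule = {
        "smvi_backup": (time(22, 0), time(23, 0)),      # hypothetical
        "snapmirror_sync": (time(12, 0), time(13, 0)),  # hypothetical
        "dedupe": (time(22, 30), time(23, 30)),         # hypothetical
    }
    print(overlapping(schedule))
```

With the example schedule, the dedupe window clashes with the SMVI backup and would need to move.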

Once SMVI successfully updates the SnapMirror, you will see the replicated snapshots on the destination side of the mirror as well. DR for your ESX environment is now in effect!

Testing the DR Plan

Here comes the fun part, and the part where SRM would be extremely helpful by automating most of this. Thanks to NetApp’s FlexClone technology we can test our DR plan without breaking the mirrors, so you can test whenever and as often as necessary without affecting production.

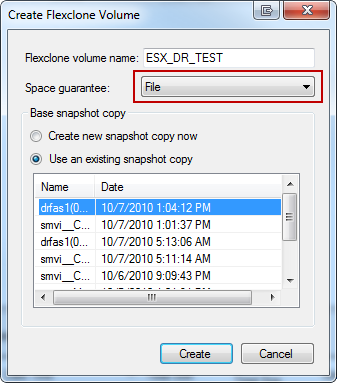

First step: create a FlexClone volume of the replicated snapshot you want to mount and test with. Choose the appropriate volume, then select Snapshot -> Clone from the Volumes menu. Note that you must use the File space guarantee or the FlexClone creation will fail! This can be done via System Manager, the CLI, or FilerView:

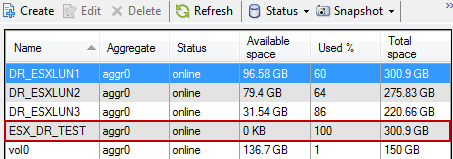

Your new volume will now be visible in the Filer’s volumes list. Creating the clone also creates a corresponding LUN, which you’ll notice is offline by default:

Bring the new LUN online and then you will be able to present it to your DR ESX hosts:

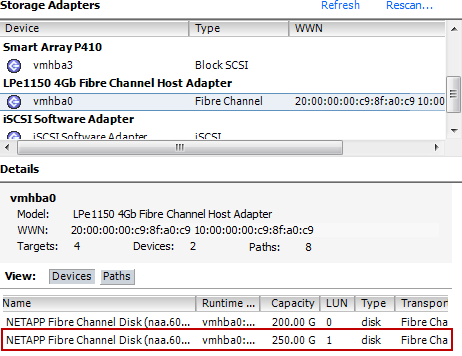
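
If you prefer the CLI route for the clone, online, and map steps, the sketch below builds the three commands as strings so you can review them before pasting them into a Filer session. It assumes Data ONTAP 7-Mode syntax and a LUN sitting directly under the volume (no qtree); the clone name, snapshot name, and igroup in the example are placeholders, so check everything against the command reference for your ONTAP release before running it.

```python
from typing import List


def flexclone_test_commands(parent_vol: str, snapshot: str, clone_vol: str,
                            lun_name: str, igroup: str) -> List[str]:
    """Build 7-Mode style commands for a DR test clone (assumed syntax)."""
    # Assumption: the LUN lives at the top level of the cloned volume.
    lun_path = f"/vol/{clone_vol}/{lun_name}"
    return [
        # "-s file" selects the File space guarantee the clone requires.
        f"vol clone create {clone_vol} -s file -b {parent_vol} {snapshot}",
        # The cloned LUN comes up offline, so bring it online first.
        f"lun online {lun_path}",
        # Present the LUN to the DR ESX hosts through their igroup.
        f"lun map {lun_path} {igroup}",
    ]


if __name__ == "__main__":
    # All names besides ESXLUN1 and VMProd1_FC are hypothetical.
    for cmd in flexclone_test_commands("ESXLUN1", "SNAPSHOT_NAME",
                                       "ESXLUN1_drtest", "VMProd1_FC",
                                       "dr_esx_hosts"):
        print(cmd)
```

Printing the commands rather than executing them is deliberate: on a DR Filer I would rather read each line once before it runs.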

The LUN should instantly appear on the ESX hosts in your iGroup, but if it does not, run a rescan on the appropriate storage adapter:

ESX sees the LUN; now it needs to be added to the cluster. Switch to the Storage page in vCenter and select “Add Storage.” Select the new disk and be sure to select “Assign a new signature” for the disk or ESX will not be able to write to it! Only a new UUID is written; all existing data will be preserved.

The new LUN will now be accessible to all ESX hosts in the cluster. Note the default naming convention used after adding the LUN:

This is where things get REALLY manual and you wish you had ponied up that $25k for SRM. Browse the newly mounted datastore and register each VM you need to test, one by one.
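
To take some of the tedium out of this, you can at least generate the list of VMs to register. The Python sketch below walks a datastore path, finds every .vmx file, and prints one registration command per VM. The `vmware-cmd -s register` form is what I would expect on a classic ESX service console, but treat it as an assumption and verify it on your hosts; the datastore path in the example is a placeholder.

```python
from pathlib import Path
from typing import List


def find_vmx(datastore_root: Path) -> List[Path]:
    """Return every .vmx file under the datastore, sorted by path."""
    # "*.vmx" matches only names ending in .vmx, so .vmxf files are skipped.
    return sorted(datastore_root.rglob("*.vmx"))


def register_commands(datastore_root: Path) -> List[str]:
    """Build one registration command per VM (command form is assumed)."""
    return [f'vmware-cmd -s register "{vmx}"'
            for vmx in find_vmx(datastore_root)]


if __name__ == "__main__":
    # Placeholder path; substitute the name your resignatured datastore got.
    for cmd in register_commands(Path("/vmfs/volumes/DATASTORE_NAME")):
        print(cmd)
```

Trim the output down to the VMs your test plan actually covers before running anything.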

Now your organization’s policies take over as to how far you need to go to satisfy a successful DR test. Once the VM is registered it can be powered on, but if its counterpart is still running in production, disable the clone’s vNIC first. If you need to go further, shut down the production VM, re-IP the DR clone, and then bring it online. If you need to have users connect to the DR clone, there are other implications to consider such as DNS, DFS, ODBC pointers, etc. When your test is complete, power off all DR VM clones, dismount the datastore from within ESX, delete the FlexClone volume on the DR Filer, bring the production VMs back up, check DNS, done. A beautiful thing, and best of all the prod -> DR mirror is still in sync!

References:
