One of the cool new features introduced in Server 2012 is the ability to team your NICs natively, fully supported by Microsoft, without using any vendor-specific drivers or software. OEM driver-based teaming solutions have been around for much longer but have never been supported by Microsoft, and disabling them is usually the first thing you are asked to do if you ever call PSS. Server 2012 teaming is easy to configure and can be used for simple to very complex networking scenarios, converged or not. In this post I’ll cover the basics of teaming and the convergence options, with a focus on Hyper-V scenarios. A quick note on vernacular: Hyper-V host, parent, parent partition, and management OS all refer to essentially the same thing. Microsoft refers to the network connections used by the Hyper-V host as the “management OS” in the system dialogs.

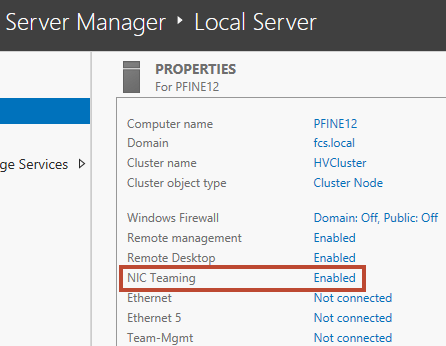

100% of the teaming configuration can be handled via PowerShell with certain items specifically requiring it. Basic functionality can be configured via the GUI which is most easily accessed from the Local Server page in Server Manager:

The NIC Teaming app isn’t technically part of Server Manager but it sure looks and feels like it. Click New Team under the tasks drop down of the Teams section:

Name the team, select the adapters you want to include (up to 32), then set the additional properties. Switch Independent teaming mode should suit the majority of use cases but can be changed to Static or LACP mode if required. The load balancing mode options are Address Hash and Hyper-V Port. If you intend to use this team within a Hyper-V switch, make sure the latter is selected. Specifying all adapters as active should also serve the majority of use cases, but a standby adapter can be specified if required.

Click OK and your new team will appear within the info boxes in the lower portion of the screen. Additional adapters can be added to an existing team at any time.
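The same team can be built from an elevated PowerShell prompt. This is a minimal sketch, not the only way to do it: the adapter names ("NIC1", "NIC2", "NIC3") and the team name ("VMTeam") are hypothetical placeholders, so substitute the names that Get-NetAdapter reports on your host.

```powershell
# Hypothetical adapter and team names; list the real adapters first.
Get-NetAdapter | Sort-Object Name | Format-Table Name, InterfaceDescription, Status, LinkSpeed

# Switch-independent team using Hyper-V Port load balancing,
# matching the GUI choices described above for a Hyper-V team.
New-NetLbfoTeam -Name "VMTeam" -TeamMembers "NIC1", "NIC2" `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm HyperVPort

# Add another adapter to the existing team later on.
Add-NetLbfoTeamMember -Name "NIC3" -Team "VMTeam"

# Optional: make that member a standby adapter instead of an active one.
Set-NetLbfoTeamMember -Name "NIC3" -AdministrativeMode Standby

# Review the team and its members.
Get-NetLbfoTeam -Name "VMTeam"
Get-NetLbfoTeamMember -Team "VMTeam"
```

The cmdlets that change the team ask for confirmation by default, so expect a prompt when running them interactively.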

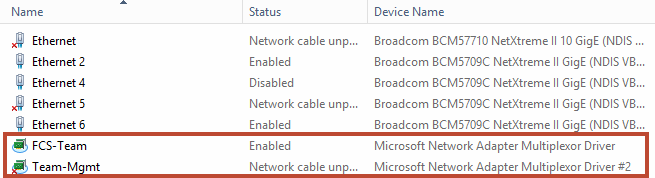

Every team and vNIC you create will be named and enabled via the Microsoft Network Adapter Multiplexor Driver. This is the device name that Hyper-V will see so name your teams intuitively and take note of which driver number is assigned to which team (multiplexor driver #2, etc).

In the Hyper-V Virtual Switch Manager, under the external network connection type you will see every physical NIC in the system as well as a multiplexor driver for every NIC team. Checking the box below the selected interface does exactly what it suggests: shares that connection with the management OS (parent).

VLANs (non Hyper-V)

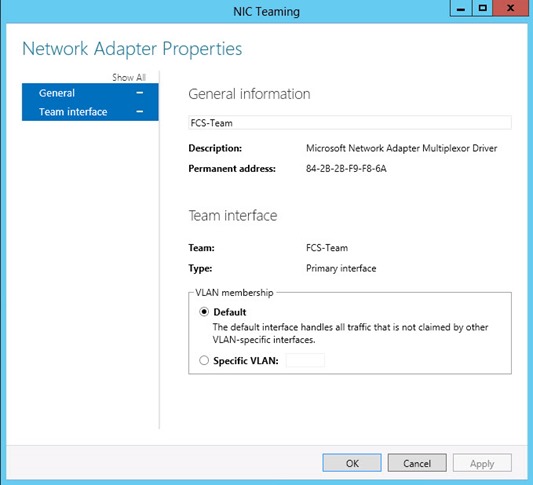

VLANs can be configured in a number of different ways depending on your requirements. At the highest level, a VLAN can be configured on the NIC team itself. From within the Adapters and Interfaces dialog, click the Team Interfaces tab, then right-click the team you wish to configure.

Entering a specific VLAN will limit all traffic on this team accordingly. If you intend to use this team with a Hyper-V switch DO NOT DO THIS! It will likely cause confusion and problems later. Leave any team to be used in Hyper-V in default mode with a single team interface and do your filtering within Hyper-V.

Additional Team Interfaces can also be created from this dialog, each of which is a vNIC tied to a specific VLAN. This can be useful for specific services that need to communicate on a dedicated VLAN that is not part of Hyper-V. First select the team on the left; the Add Interface drop-down item on the right will then become active.

Name the interface and set the intended VLAN. Once created these will also appear as multiplexor driver devices in the network connections list. They can then be assigned IP addresses the same as any other NIC. vNICs created in this manner are not intended for use in Hyper-V switches!
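For reference, a team interface like this can also be added from PowerShell. The sketch below assumes a hypothetical team named "MgmtTeam", an example VLAN ID of 42, and an example IP address; none of these values come from a real environment.

```powershell
# Hypothetical team name, VLAN ID, and interface name.
Add-NetLbfoTeamNic -Team "MgmtTeam" -VlanID 42 -Name "MgmtTeam - VLAN 42"

# List every interface on the team, including the default one.
Get-NetLbfoTeamNic -Team "MgmtTeam"

# Assign an address the same way as for any other NIC (example address only).
New-NetIPAddress -InterfaceAlias "MgmtTeam - VLAN 42" -IPAddress 192.168.42.10 -PrefixLength 24
```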

VLANs (Hyper-V)

For Hyper-V VLAN assignments, the preferred method is to let the Hyper-V switch perform all filtering. The details vary a bit depending on whether you are assigning management VLANs to the Hyper-V parent or to guest VMs. For guest VMs, VLAN IDs should be specified on the vNICs connected to a Hyper-V switch port. If multiple VLANs need to be assigned, add multiple network adapters to the VM and assign VLAN IDs as appropriate. This can also be accomplished in PowerShell using the Set-VMNetworkAdapterVlan cmdlet.
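As a rough illustration of the guest VM case, the sketch below tags a VM's first adapter and then adds a second adapter for another VLAN. The VM name, switch name, adapter names, and VLAN IDs are all hypothetical, and "Network Adapter" is assumed to be the default adapter name on an English-language host.

```powershell
# Hypothetical VM, switch, and VLAN values throughout.
# Put the VM's existing adapter into access mode on VLAN 100.
Set-VMNetworkAdapterVlan -VMName "VDI-Desktop01" -VMNetworkAdapterName "Network Adapter" `
    -Access -VlanId 100

# Add a second adapter for a second VLAN and tag it.
Add-VMNetworkAdapter -VMName "VDI-Desktop01" -SwitchName "ConvergedSwitch" -Name "VLAN200"
Set-VMNetworkAdapterVlan -VMName "VDI-Desktop01" -VMNetworkAdapterName "VLAN200" `
    -Access -VlanId 200

# Confirm the VLAN assignment of each adapter on the VM.
Get-VMNetworkAdapterVlan -VMName "VDI-Desktop01"
```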

NIC teaming can also be enabled within a guest VM by configuring the advanced feature item within the VM’s network adapter settings. This is an either/or scenario: guest vNICs can be teamed only if there is no teaming in the management OS.
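The advanced feature checkbox has a PowerShell equivalent in the AllowTeaming setting of Set-VMNetworkAdapter. A small sketch, assuming a hypothetical VM named "GuestVM01":

```powershell
# Hypothetical VM name. Allows the VM's adapters to join a team inside the guest OS.
Set-VMNetworkAdapter -VMName "GuestVM01" -AllowTeaming On

# Check the setting on each of the VM's adapters.
Get-VMNetworkAdapter -VMName "GuestVM01" | Format-Table Name, SwitchName, AllowTeaming
```

The team itself is still created inside the guest operating system; this only permits it on the host side.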

Assigning VLANs to interfaces used by the Hyper-V host can be done a couple of different ways. In the simplest case, if your Hyper-V host shares the team or NIC behind a virtual switch, this can be specified in the Virtual Switch Manager for one of your external vSwitches. Optionally, a different VLAN can be specified for the management OS.
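A PowerShell version of this simple shared setup might look like the sketch below. The switch name "ExternalSwitch" and team name "VMTeam" are hypothetical, and the sketch assumes the host vNIC created by sharing takes the same name as the switch.

```powershell
# Hypothetical switch and team names.
# Create an external switch on the team and share it with the management OS.
New-VMSwitch -Name "ExternalSwitch" -NetAdapterName "VMTeam" -AllowManagementOS $true

# Tag the management OS vNIC (assumed to be named after the switch) with VLAN 10.
Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "ExternalSwitch" -Access -VlanId 10
```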

Converged vs Non-converged

Before I go much further on carving VLANs for the management OS out of Hyper-V switches, let’s look at a few scenarios and identify why we may or may not want to do this. Thinking through some of these scenarios can be a bit tricky conceptually, especially if you’re used to working with vSphere. In ESXi all traffic sources and consumers go through a vSwitch, in all cases. ESXi management, cluster communications, vMotion, guest VM traffic…everything. In Hyper-V you can do it this way, but you don’t have to, and depending on how or what you’re deploying you may not want to.

First let’s look at a simple convergence model. This use case applies to a clustered VDI infrastructure with all desktops and management components running on the same set of hosts. Two 10Gb NICs are configured in a team, so all network traffic for both guest VMs and parent management traverses the same interfaces, while storage traffic is split out via NPAR to the MPIO drivers. The NICs are teamed for redundancy, guest VMs attach to the vSwitch, and the management OS receives weighted vNICs from the same vSwitch. Once a team is assigned to a Hyper-V switch, it cannot be used by any other vSwitch.

Assigning vNICs to be consumed by the management OS is done via PowerShell using the Add-VMNetworkAdapter command. There is no way to do this via the GUI. The vNIC is assigned to the parent via the ManagementOS designator, named whatever you like and assigned to the virtual switch (as named in Virtual Switch Manager).
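A minimal sketch of those commands follows. The switch name "ConvergedSwitch" and team name "VMTeam" are hypothetical; the vNIC names match the ones used in the VLAN and weight commands later in this post. The switch is created in Weight mode here on the assumption that you intend to apply minimum bandwidth weights, because that mode can only be chosen when the switch is created.

```powershell
# Hypothetical switch and team names.
# Weight mode is required for MinimumBandwidthWeight and cannot be changed later.
# AllowManagementOS $false avoids creating a default host vNIC.
New-VMSwitch -Name "ConvergedSwitch" -NetAdapterName "VMTeam" `
    -MinimumBandwidthMode Weight -AllowManagementOS $false

# Carve out vNICs for the management OS on that switch.
Add-VMNetworkAdapter -ManagementOS -Name "Management" -SwitchName "ConvergedSwitch"
Add-VMNetworkAdapter -ManagementOS -Name "Cluster" -SwitchName "ConvergedSwitch"
Add-VMNetworkAdapter -ManagementOS -Name "Live Migration" -SwitchName "ConvergedSwitch"
```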

Once the commands are successfully issued, you will see the new vNICs created in the network connections list that can be assigned IP addresses and configured like any other interface.

You can also see the lay of the land in PowerShell (elevated) by issuing the Get-VMNetworkAdapter command.
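For example, the following shows the host vNICs on their own, then everything on the host, then the matching entries in the network connections list. The "vEthernet" prefix is the default naming for host vNICs.

```powershell
# Only the vNICs that belong to the management OS.
Get-VMNetworkAdapter -ManagementOS

# Every virtual network adapter on the host, guest VMs included.
Get-VMNetworkAdapter -All

# The same host vNICs as they appear in the network connections list.
Get-NetAdapter -Name "vEthernet*"
```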

Going a step further, assign VLANs and weighted priorities for the management OS vNICs:

Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "Cluster" -Access -VlanId 25

Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "Live Migration" -Access -VlanId 50

Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "Management" -Access -VlanId 10

Set-VMNetworkAdapter -ManagementOS -Name "Cluster" -MinimumBandwidthWeight 40

Set-VMNetworkAdapter -ManagementOS -Name "Live Migration" -MinimumBandwidthWeight 20

Set-VMNetworkAdapter -ManagementOS -Name "Management" -MinimumBandwidthWeight 5
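To confirm the result, something like the following can be used. It is a sketch that reads the VLAN assignments and the weight stored on each host vNIC; the "MinWeight" column name is just a label chosen here, and the weight shows as empty for any vNIC that has no bandwidth setting.

```powershell
# VLAN mode and ID for each management OS vNIC.
Get-VMNetworkAdapterVlan -ManagementOS

# Minimum bandwidth weight for each management OS vNIC.
Get-VMNetworkAdapter -ManagementOS |
    Select-Object Name, @{ Name = "MinWeight"; Expression = { $_.BandwidthSetting.MinimumBandwidthWeight } }
```

The weight commands only succeed on a virtual switch that was created with its minimum bandwidth mode set to Weight.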

For our second scenario let’s consider a non-converged model with 6 NICs: 2 for iSCSI storage, 2 for the Hyper-V switch and 2 for the management OS. The NICs that carry any Ethernet-based storage protocol traffic should not be teamed; let MPIO take care of this. The NIC team used by the Hyper-V switch is not shared with any other host function, and the same goes for the management team.

This is a perfectly acceptable method of utilizing more NICs on a Hyper-V host if you haven’t bought into the converged network models. Not everything must go through a vSwitch if you don’t want it to. Flexibility is good but this is the point that may confuse folks. vNICs dedicated to the management OS attached to the Mgmt NIC team can be carved out via PowerShell or via the Teaming GUI as Team Interfaces. Since these interfaces will exist and function outside of Hyper-V, it is perfectly acceptable to configure them in this manner. Storage traffic should be configured using MPIO and not NIC teaming.
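Pulled together, the non-converged layout could be sketched roughly as follows. Every name here is a hypothetical placeholder ("NIC3" through "NIC6", "VMTeam", "MgmtTeam", "GuestSwitch"), the VLAN IDs simply reuse the ones from the converged example, and the two storage NICs are deliberately left out of any team.

```powershell
# Hypothetical adapter, team, and switch names throughout.
# Team dedicated to the Hyper-V switch, not shared with the management OS.
New-NetLbfoTeam -Name "VMTeam" -TeamMembers "NIC3", "NIC4" `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm HyperVPort
New-VMSwitch -Name "GuestSwitch" -NetAdapterName "VMTeam" -AllowManagementOS $false

# Separate team for the management OS, outside of Hyper-V.
# TransportPorts is the address hash option in PowerShell terms.
New-NetLbfoTeam -Name "MgmtTeam" -TeamMembers "NIC5", "NIC6" `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm TransportPorts

# Team interfaces per VLAN for host functions.
Add-NetLbfoTeamNic -Team "MgmtTeam" -VlanID 25 -Name "Cluster"
Add-NetLbfoTeamNic -Team "MgmtTeam" -VlanID 50 -Name "Live Migration"

# Storage NICs stay unteamed; MPIO handles the iSCSI paths instead.
Install-WindowsFeature -Name Multipath-IO
Enable-MSDSMAutomaticClaim -BusType iSCSI
```

Installing the MPIO feature may call for a restart before the claim cmdlet is available, so treat the last two lines as separate steps rather than one script.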

The native Server 2012 NIC teaming offering is powerful and a much-welcomed addition to the Server software stack. There are certainly opportunities for Microsoft to enhance and simplify this feature further by adding best-practice gates to prevent misconfiguration. The bandwidth weighting calculations could also be made simpler and more in line with WYSIWYG. Converged or not, there are a number of ways to configure teaming in Server 2012 to fit any use case or solution model, with particular attention paid to Hyper-V.
