Peter Fine here from Dell CCC Solution Engineering, where we just finished an extensive refresh of our XenApp recommendation within the Dell Wyse Datacenter for Citrix solution architecture. Although it was not called “XenApp” in XenDesktop versions 7 and 7.1, the name has officially returned for version 7.5. XenApp is still architecturally linked to XenDesktop from a management infrastructure perspective but can also be deployed as a standalone architecture from a compute perspective. The best part of all now is flexibility. Start with XenApp or start with XenDesktop, then seamlessly integrate the other at a later time with no disruption to your environment. All XenApp really is now is a Windows Server OS running the Citrix Virtual Delivery Agent (VDA). That’s it! XenDesktop, on the other hand, is a Windows desktop OS running the VDA.

Architecture

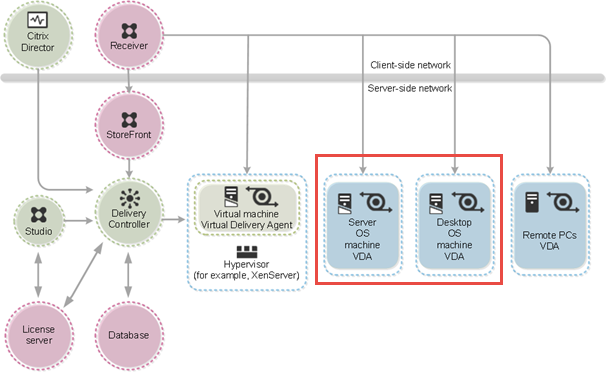

The logical architecture depicted below displays the relationship between the two use cases, outlined in red. All of the infrastructure that controls the brokering, licensing, etc. is the same between them. This simplification of architecture comes as a result of XenApp shifting from the legacy Independent Management Architecture (IMA) to XenDesktop’s FlexCast Management Architecture (FMA). It just makes sense and we are very happy to see Citrix make this move. You can read more about the individual service components of XenDesktop/XenApp here.

Expanding the architectural view to include the physical and communication elements, XenApp fits quite nicely with XenDesktop and complements any VDI deployment. I recommend using compute hosts dedicated to XenApp and XenDesktop, respectively, for simpler scaling and sizing. Below you can see the physical management and compute hosts on the far left side, each with its respective components. Management remains the same regardless of what type of compute host you ultimately deploy, but there are several different deployment options. Tier 1 and tier 2 storage are treated the same way when XenApp is in play, which can make use of local or shared disk depending on your requirements. XenApp also integrates nicely with Provisioning Services (PVS), which can be used for deployment and easy scale-out scenarios. I have another post queued up for PVS sizing in XenDesktop.

From a stack view perspective, XenApp fits seamlessly into an existing XenDesktop architecture or can be deployed into a dedicated stack. Below is a view of a Dell Wyse Datacenter stack tailored for XenApp running on either vSphere or Hyper-V using local disks for Tier 1. XenDesktop slips easily into the compute layer here with our optimized host configuration. Be mindful of the upper scale when utilizing a single management stack, as 10K users and above is generally considered very large for a single farm. The important point to note is that the network, management and storage layers are completely interchangeable between XenDesktop and XenApp. Only the host config in the compute layer changes slightly for XenApp-enabled hosts based on our optimized configuration.

Use Cases

There are a number of use cases for XenApp, which ultimately relies on Windows Server’s RDSH role (terminal services). The age-old and most obvious use case is for hosted shared sessions, i.e. many users logging into and sharing the same Windows Server instance via RDP. This is useful for managing access to legacy apps, providing a remote access/VPN alternative, or controlling access to an environment that can only be reached through the XenApp servers. The next step up naturally extends to application virtualization where, instead of multiple users being presented with and working from a full desktop, they simply launch the applications they need to use from another device. These virtualized apps, of course, consume a full shared session on the backend even though the user only interacts with a single application. Either scenario can now be deployed easily via Delivery Groups in Citrix Studio.

XenApp also complements full XenDesktop VDI through the use of application off-load. It is entirely viable to load every application a user might need within their desktop VM, but this comes at a performance and management cost. Every VDI user on a given compute host will have a percentage of allocated resources consumed by running these applications, which all have to be kept up to date and patched unless they are part of the base image. Leveraging XenApp with XenDesktop provides the ability to off-load applications and their loads from the VDI sessions to the XenApp hosts. Let XenApp absorb those burdens for the applications that make sense. Now instead of running MS Office in every VM, run it from XenApp and publish it to your VDI users. Patch it in one place, shrink your gold images for XenDesktop and free up resources for other, more intensive, non-XenApp-friendly apps you really need to run locally. Best of all, your users won’t be able to tell the difference!

Optimization

We performed a number of tests to identify the optimal configuration for XenApp. There are a number of ways to go here: physical, virtual, or PVS streamed to physical/virtual using a variety of caching options. There are also a number of ways in which XenApp can be optimized. Citrix wrote a very good blog article covering many of these optimization options, most of which we confirmed. The one outlier turned out to be NUMA, where we really didn’t see much difference with it turned on or off. We ran through the following test scenarios using the core DWD architecture with Login VSI light and medium workloads for both vSphere and Hyper-V:

- Virtual XenApp server optimization on both vSphere and Hyper-V to discover the right mix of vCPUs, oversubscription, RAM and total number of VMs per host

- Physical Windows 2012 R2 host running XenApp

- The performance impact and benefit of enabling NUMA to keep the RAM accessed by a CPU local to its adjacent DIMM bank

- The performance impact of various provisioning mechanisms for VMs: MCS, PVS write cache to disk, PVS write cache to RAM

- The performance impact of increased user idle time to simulate user concurrency below the 80+% level on any given host

To identify the best XenApp VM config we tried a number of configurations including a mix of 1.5x CPU core oversubscription, fewer very beefy VMs and many less beefy VMs. It is important to note that we based this on the 10-core Ivy Bridge part E5-2690v2, which features Hyper-Threading and Turbo Boost. These things matter! The highest density and best user experience came with 6 x VMs, each outfitted with 5 x vCPUs and 16GB RAM. Of the delivery methods we tried (outlined in the table below), Hyper-V netted the best results regardless of provisioning methodology. We did not get a better density between PVS caching methods, but PVS cache in RAM completely removed any IOPS generated against the local disk. I’ll go more into PVS caching methods and results in another post.

Interestingly, of all the scenarios we tested, the native Server 2012 R2 + XenApp combination performed the poorest. PVS streamed to a physical host is another matter entirely, but unfortunately we did not test that scenario. We also saw no benefit from enabling NUMA. There was a time when a CPU accessing an adjacent CPU’s remote memory banks across the interconnect paths hampered performance, but given the current architecture in Ivy Bridge and its fat QPIs, this doesn’t appear to be a problem any longer.

The “Dell Light” workload below was adjusted to account for less than the 80% user concurrency that we typically plan for in traditional VDI. Citrix observed that XenApp users in the real world tend not to all work at the same time. Fewer users working concurrently means freed resources and the opportunity to run more total users on a given compute host.

The net of this study shows that the hypervisor and XenApp VM configuration matter more than the delivery mechanism. MCS and PVS ultimately netted the same performance results but PVS can be used to solve other specific problems if you have them (IOPS).

* CPU % for ESX Hosts was adjusted to account for the fact that Intel E5-2600v2 series processors with the Turbo Boost feature enabled will exceed the ESXi host CPU metrics of 100% utilization. With E5-2690v2 CPUs the rated 100% in ESXi is 60000 MHz of usage, while actual usage with Turbo has been seen to reach 67000 MHz in some cases. The Adjusted CPU % Usage is based on 100% = 66000 MHz usage and is used in all charts for ESXi to account for Turbo Boost. Windows Hyper-V metrics by comparison do not report usage in MHz, so only the reported CPU % usage is used in those cases.

** The “Dell Light” workload is a modified VSI workload to represent a significantly lighter type of user. In this case the workload was modified to produce about 50% idle time.

† Avg IOPS observed on disk is 0 because it is offloaded to RAM.
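
The Turbo Boost adjustment described in the first footnote above can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the 60000 MHz and 66000 MHz figures come straight from the footnote, while the function and constant names are made up for this example and are not part of any VMware or Dell tooling.

```python
# Figures taken from the ESXi footnote; names are illustrative assumptions.
RATED_MHZ = 60_000     # what ESXi treats as 100% on this host
ADJUSTED_MHZ = 66_000  # Turbo-adjusted ceiling used as 100% in the charts


def adjusted_cpu_percent(reported_percent: float) -> float:
    """Rescale an ESXi-reported CPU % to the Turbo-adjusted scale."""
    used_mhz = reported_percent / 100 * RATED_MHZ
    return used_mhz / ADJUSTED_MHZ * 100


def mhz_to_adjusted_percent(used_mhz: float) -> float:
    """Express raw MHz usage as a percentage of the adjusted ceiling."""
    return used_mhz / ADJUSTED_MHZ * 100


if __name__ == "__main__":
    for reported in (80, 100, 110):
        adjusted = adjusted_cpu_percent(reported)
        print(f"ESXi {reported}% -> adjusted {adjusted:.1f}%")
```

In other words, a host that ESXi shows at 110% sits right at the adjusted ceiling, and the occasional 67000 MHz peak lands slightly above it.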

Summary of configuration recommendations:

- Enable Hyper-Threading and Turbo for oversubscribed performance gains.

- NUMA did not show a significant impact whether enabled or disabled.

- 1.5x CPU oversubscription per host produced excellent results: 20 physical cores x 1.5 oversubscription nets 30 vCPUs assigned to VMs.

- Virtual XenApp servers outperform dedicated physical hosts with no hypervisor so we recommend virtualized XenApp instances.

- Using 10-Core Ivy Bridge CPUs, we recommend running 6 x XenApp VMs per host, each VM assigned 5 x vCPUs and 16GB RAM.

- PVS cache in RAM (with HD overflow) will reduce the user IO generated to disk to almost nothing but may require greater RAM densities on the compute hosts. 256GB is a safe high water mark using PVS cache in RAM based on a 21GB cache per XenApp VM.
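
To make the arithmetic behind these recommendations easy to rerun for a different CPU or VM size, here is a rough sketch in Python. The default values are the ones from the list above; the function name is hypothetical, and the RAM math assumes the 21GB PVS cache is budgeted on top of each VM's 16GB and ignores hypervisor overhead, which is a simplification on my part rather than a tested result.

```python
def xenapp_host_plan(physical_cores=20, oversubscription=1.5, vcpus_per_vm=5,
                     ram_per_vm_gb=16, pvs_ram_cache_gb=21, host_ram_gb=256):
    """Rough per-host sizing from the recommendations above (illustrative)."""
    # vCPU budget: physical cores times the oversubscription ratio
    vcpu_budget = int(physical_cores * oversubscription)
    # How many whole VMs fit into that vCPU budget
    vm_count = vcpu_budget // vcpus_per_vm
    # Assumption: PVS RAM cache is counted in addition to each VM's base RAM
    vm_ram_gb = vm_count * ram_per_vm_gb
    cache_ram_gb = vm_count * pvs_ram_cache_gb
    total_ram_gb = vm_ram_gb + cache_ram_gb
    return {
        "vcpu_budget": vcpu_budget,
        "vm_count": vm_count,
        "vm_ram_gb": vm_ram_gb,
        "cache_ram_gb": cache_ram_gb,
        "total_ram_gb": total_ram_gb,
        "headroom_gb": host_ram_gb - total_ram_gb,
    }


if __name__ == "__main__":
    for key, value in xenapp_host_plan().items():
        print(f"{key}: {value}")
```

With the defaults this works out to 30 vCPUs, 6 VMs and 222GB of RAM including cache, which fits under the 256GB high water mark with some room left for the hypervisor.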

Resources:

Dell Wyse Datacenter for Citrix – Reference Architecture
