HTPC build for Plex three part series:

- Hardware build and Storage Spaces

- Plex server and clients

- Performance

I’ve had a single-disk Home Theater PC (HTPC) running Plex for a while now, but with my ReadyNAS nearing the end of its useful life, I decided to kill two birds with one stone: build an updated HTPC with drive redundancy to also take over the functions of my aging NAS. My HTPC stays on 24/7 anyway so this makes perfect sense. My design goals were to build a small, quiet, low-power, data-redundant and sufficiently performing box that I can stuff into my TV cabinet. The extent of the duties for this HTPC will be video streaming and file sharing/backup, no gaming. This post details my build, Plex server, Plex clients, Windows Storage Spaces, as well as the performance of the storage subsystem.

HTPC Build

Trying to keep my costs in line while building in performance where it mattered, I decided to go with an AMD-based mini-ITX (m-ITX) form factor system running Windows 8.1 x64. The motherboard and CPU were sourced a year ago but still provide more than adequate performance for my purposes. I went a little higher on the CPU than I really needed to, but since video transcoding is very CPU intensive, more horsepower there is certainly not wasted. All parts were sourced from Amazon, which has officially conquered the PC parts space; Newegg can no longer compete (on price or return policy). A decent 2-bay ReadyNAS or Synology NAS will set you back at least this much, plus this way I get a full featured box without compromises.

- CPU – AMD A4-5300 FM2, dual core, 3.4GHz, 65w, $50

- MOBO – MSI FM2-A75IA-E53, $100

- Case – Cooler Master Elite 130, $50

- RAM – Corsair 8GB DDR3/1333 Memory, $60

- PSU – Silverstone SFX 450w, $70

- Disk – 1 x Crucial M500 120GB SSD (OS), $72

- Disk – 3 x WD Red 3TB (Spaces), $122 each

Total: $768
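As a quick sanity check on the total, here is a minimal sketch that adds up the parts list above. The dictionary keys are just illustrative labels, and the "storage share" figure is my own derived number, not something quoted by a vendor.

```python
# Prices in USD, copied from the parts list above.
parts = {
    "CPU: AMD A4-5300": 50,
    "Motherboard: MSI FM2-A75IA-E53": 100,
    "Case: Cooler Master Elite 130": 50,
    "RAM: Corsair 8GB DDR3/1333": 60,
    "PSU: Silverstone SFX 450w": 70,
    "SSD: Crucial M500 120GB": 72,
    "HDD: WD Red 3TB x3": 3 * 122,
}

total = sum(parts.values())

# How much of the budget went to disks (SSD plus the three HDDs).
storage = parts["SSD: Crucial M500 120GB"] + parts["HDD: WD Red 3TB x3"]
storage_share = storage / total

print(f"Total: ${total}")
print(f"Storage share of budget: {storage_share:.0%}")
```

More than half of the spend is disks, which is about what you would expect for a box that doubles as a NAS.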

This A-series 65w AMD APU (Trinity) includes a robust dual-core clocked at 3.4GHz (3.6GHz turbo) with on-die Radeon HD 7480D for graphics. The RAM isn’t anything special, just trusty Corsair 4GB DIMMs clocked at 1333MHz. The MSI main board provides all the connections I need and pairs nicely with the Cooler Master case: 4 x SATA ports, 2 x DIMM slots, and a PCIe slot should I ever choose to drop in a full length GPU.

I had originally spec’d this build using the WD AV-GP disks, which are purpose built for DVRs, security systems and 24/7 streaming operation. This was the disk I used originally a year ago and it has served me faithfully and quietly. Online benchmarks show this drive performing better than the Green and Red drives from WD. Due to a parts sourcing issue I was forced to look for alternatives and landed on the WD Red disks, which are actually a bit cheaper. Low noise, low power consumption, built for 24/7 NAS duty… these drives are very favorably reviewed. With a rotational speed of ~5400 RPM, the WD Reds are nowhere near the highest performing HDDs on the market, but for the purposes of an HTPC/NAS their performance is perfectly sufficient.

Quality SSDs are cheaper than ever now and make a great choice for your OS disk when you separate your data (as you always should). These disks are capable of thousands of IOPS and make install, boot up and OS operations lightning quick.

For power I once again turned to Silverstone, who have a 450w 80 PLUS Bronze unit in SFX form factor. Plenty of power with a very minimal footprint, which is ideal when working with m-ITX. This non-modular PSU has only 3 SATA connectors on a single string, so I used a Molex to SATA power adapter for my 4th drive. This PSU provides plenty of juice for these power sipping components and doesn’t block the CPU fan.

Finding the “perfect” case for whatever project can prove challenging. For this project I wanted a small m-ITX case that can house 3 x 3.5” HDDs + a 2.5” SSD, and Cooler Master had the answer. Well ventilated with plenty of working room, support for a full length GPU and full size PSU. You can mount a BD-ROM if you like or use that space for a 3.5” HDD. The finish is matte black inside and out with all rounded corners and edges. My only gripe is the included front case fan which, although extremely quiet, has no protection grill on its backside. You have to be very careful with your wires as there is nothing to stop them from coming into direct contact with the fan blades. With the one-piece cover removed there is loads of working room in this case. With four SATA connections, cabling gets a bit tight on the rear side but it’s completely manageable.

With the Elite 130 case loaded you can see the three WD Red drives: one on the floor, one on the wall and one on the top in the 5.25” bay. The SSD is mounted below the 5.25” bay; you can see the grey-beige rear of its case.

The SFX PSU sticks out the back of the case a few inches, which provides even greater clearance for the CPU fan. The PSU did require the ATX bracket to fit this case perfectly. Both the CPU and PSU fans are completely unobstructed, with fresh air brought in by the 120mm front fan. This case comes with an 80x15mm fan mounted on the side by the SATA ports. I found this fan to be noisy and in the way, so I removed it.

When all was said and done, power consumption for this build is at 29w idle and up around 75w under load. Awesome!!! See the performance section at the bottom for more details.

Storage Spaces

Debuted in Windows 8/Server 2012, Storage Spaces provides a new and very interesting method for controlling storage. Part of the “software defined” market segment, Spaces supplants traditional software and hardware RAID by providing the same data protection capability with a ton of additional flexibility. The biggest value propositions for Spaces in Windows 8.1 are the ability to thin provision (create drives with more space than you actually have or need), use disparate drive media (create a pool from several different disks, internal and external USB) and choose from a few different resiliency methods to control performance or provide greater redundancy. Server 2012 R2 adds a few additional features to Spaces, namely the ability to provide performance tiering between SSDs and HDDs and post-process deduplication, which can dramatically reduce consumed capacity. At the end of this exercise I will have two Spaces called Docs and Media, both with 2-way mirror resiliency.

Setting up Spaces in Windows 8.1 is a trivial affair once the drives have been physically added to your build. One of the only restrictions is that the disk where the OS is installed cannot be used in a Spaces pool. First open the Spaces management console by pressing Windows Key + Q and typing spaces.

Click create a new pool and select the unformatted disks you want to include. Additional disks can be easily added later.

Storage Spaces (aka virtual disks) can now be created within the physical disk pool and will be presented back to the OS as logical drives with drive letters. Give the new Space a name (for me I have functionally separated Spaces for Docs and Media), choose a drive letter and select a file system. Choosing a mirrored resiliency type opens up an additional file system option called ReFS, which is the recommended file system for mirrored Spaces. NTFS will be the only option for all others. ReFS builds on NTFS by providing greater data integrity including self healing, prioritized data availability, high scalability and app compatibility. Here is a quick breakdown of the available resiliency types:

- Simple (no resiliency) – just like it says, only one copy of your data so offers no protection. This is akin to RAID0 and can be useful if you simply want to thin provision a large logical drive with no data protection.

- Two-way mirror – writes two copies of your data on different physical disks thus protecting from a single disk failure. This requires a minimum of two disks.

- Three-way mirror – writes three copies of your data on different physical disks thus protecting you from two simultaneous disk failures. This requires a minimum of five disks.

- Parity – writes your data with additional parity information for recovery, similar to RAID5. This protects from a single disk failure and requires a minimum of three drives. Parity Spaces will give you more available capacity but at the cost of reduced IO performance. See the performance section at the end for more details.
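The breakdown above can be captured in a small lookup table. This is only a sketch for reasoning about what a given pool can support: the minimum disk counts and tolerated failures for the mirror and parity types come from the list above, the one-disk minimum for Simple is my own addition, and the names `RESILIENCY` and `available_types` are made up for illustration (they are not part of any Windows API).

```python
# Minimum disks and tolerated disk failures per resiliency type.
RESILIENCY = {
    "simple":           {"min_disks": 1, "failures_tolerated": 0},  # min of 1 is assumed
    "two-way mirror":   {"min_disks": 2, "failures_tolerated": 1},
    "three-way mirror": {"min_disks": 5, "failures_tolerated": 2},
    "parity":           {"min_disks": 3, "failures_tolerated": 1},
}

def available_types(pool_disks):
    """Return the resiliency types a pool with this many disks can host."""
    return [
        name
        for name, rule in RESILIENCY.items()
        if pool_disks >= rule["min_disks"]
    ]

# My pool has three WD Reds, so three-way mirror is off the table.
print(available_types(3))
```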

The images below show the capacity impact of resiliency using a two-way mirror vs parity. The two-way mirror mode will consume 2x of the stated capacity for every file stored (50% reduction of available capacity). All spaces can be thin provisioned and adjusted later if need be. As you can see below, a 2TB logical Space requires 4TB of actual storage should it fill completely.

Parity will consume 1.5x of the stated capacity for every file stored. Based on the 2TB logical Space shown below, if that volume were to fill completely it would consume 3TB of total available capacity.
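The capacity math for the two modes can be sketched in a few lines. The 2x and 1.5x factors are the ones quoted above; treating parity overhead as columns / (columns - 1) is my generalization of the three-disk case and should be read as an assumption, as should the function names. Capacities are raw TB, ignoring formatting overhead.

```python
def overhead_factor(resiliency, columns=3):
    """Physical capacity consumed per unit of logical data stored."""
    if resiliency == "simple":
        return 1.0
    if resiliency == "two-way mirror":
        return 2.0
    if resiliency == "three-way mirror":
        return 3.0
    if resiliency == "parity":
        # Assumption: one column's worth of each stripe holds parity.
        return columns / (columns - 1)
    raise ValueError(f"unknown resiliency type: {resiliency}")

def physical_footprint_tb(logical_tb, resiliency, columns=3):
    """Pool capacity a Space consumes if it fills completely."""
    return logical_tb * overhead_factor(resiliency, columns)

def max_data_tb(pool_tb, resiliency, columns=3):
    """Most data a pool can actually hold under one resiliency type."""
    return pool_tb / overhead_factor(resiliency, columns)

pool_tb = 3 * 3  # three 3TB WD Reds
for kind in ("two-way mirror", "parity"):
    print(kind,
          physical_footprint_tb(2, kind),   # the 2TB Space from the text
          max_data_tb(pool_tb, kind))
```

Because Spaces are thin provisioned, nothing stops you from creating logical drives that add up to more than the pool can hold; the second function is the number to watch as the drives fill up.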

Once a Space has been created only the size and drive letter can be changed. If you wish to change resiliency type for a space, you’ll have to create a new Space and migrate your data to it.

New drives can be added later to an existing pool very easily and with no down time. Note that adding a drive to an existing pool will destroy any data currently on that drive.

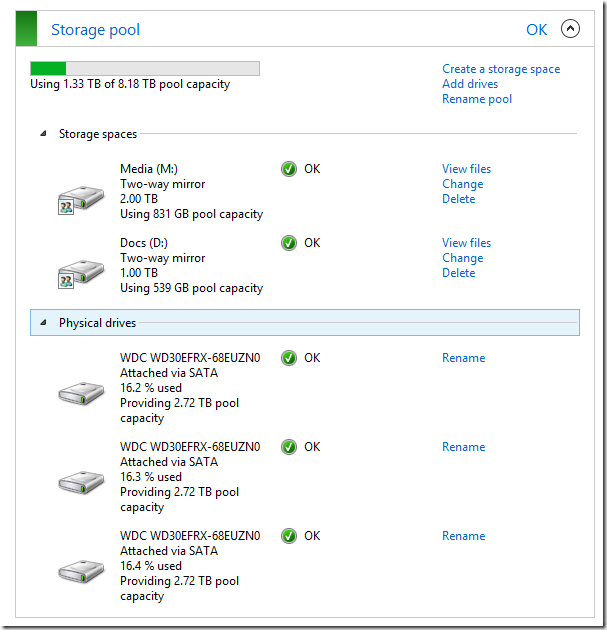

Once configured, you will have a view of your logical Spaces, consumption stats as well as the physical disks backing them in the pool. Here you can see my three disk pool and two Spaces, each with a two-way mirror. Notice that the actual data consumed on the physical disks is evenly spread across all three. Having tried both Parity and two-way mirror resiliency, I decided to take the capacity hit and use the mirror method on both since it performs much better than Parity. Once finalized, I moved all of my pictures and videos into the new M drive.

This is reflected in Windows File Explorer with the two new logical drives and their individual space consumption levels.

Slabs and Columns

Underneath it all Microsoft has a few interesting concepts, some of them customizable, which can be used to achieve certain results. I’ve linked to many good information sources in my references section at the bottom if you’d like more info on these concepts. Data that is mirrored is done so in 256MB slabs, which is what is actually copied to each physical disk in a two-way mirror. Half of each slab is allocated to two separate disks. In the event of a disk failure, Spaces will self heal by identifying all affected slabs that were on the failed disk and reallocating them to any suitable remaining disk in the pool. You can take a peek under the covers if you’re curious by running the Optimize-Volume -Verbose command in PowerShell:

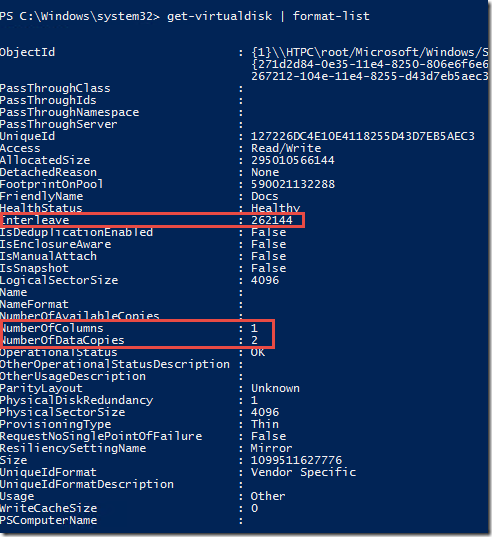
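To make the slab and self-heal idea concrete, here is a toy model in Python. It is not how the real allocator works (Microsoft's placement logic is not described in this post); it simply assumes each 256MB slab has two copies on two different disks, places them round-robin across disk pairs, and on a failure re-creates the lost copy on a surviving disk. All function and disk names are hypothetical.

```python
import itertools

SLAB_MB = 256  # slab size quoted above

def slabs_needed(data_gb):
    """Number of 256MB slabs needed to hold data_gb (rounded up)."""
    return -(-data_gb * 1024 // SLAB_MB)

def place_mirrored(num_slabs, disks):
    """Toy placement: cycle through every pair of disks in turn."""
    pairs = itertools.cycle(itertools.combinations(disks, 2))
    return [set(next(pairs)) for _ in range(num_slabs)]

def heal(placement, failed, survivors):
    """Re-create lost copies on a survivor that lacks the slab."""
    healed = []
    for copies in placement:
        if failed in copies:
            kept = (copies - {failed}).pop()
            target = next(d for d in survivors if d != kept)
            copies = {kept, target}
        healed.append(copies)
    return healed

disks = ["A", "B", "C"]
placement = place_mirrored(slabs_needed(1), disks)   # 1GB of data
affected = sum("C" in copies for copies in placement)
healed = heal(placement, failed="C", survivors=["A", "B"])

print(f"{len(placement)} slabs, {affected} affected by losing disk C")
print(all(len(copies) == 2 for copies in healed))
```

The point of the model is just that only the slabs touching the failed disk need work, and after healing every slab is back to two copies on two different disks.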

Another important concept in Storage Spaces is that of columns, which define the number of underlying physical disks across which one stripe of data for a virtual disk is written. This takes a minute to wrap the head around. By default, Spaces creates each virtual disk with one column when there are two disks present. So one column means one stripe on two disks. The interleave value dictates how much data is written to a single column per stripe, by default 256KB. For larger JBODs with dozens of disks the column count can go as high as eight. In my case only having three disks, one column is fine. Two columns makes sense if you have four disks, for example. Generally speaking, more columns means higher performance. The downside of more columns is that this controls how you will scale out later. A two column virtual disk will require you to expand your storage pool by two physical disks at a time. Creating a virtual disk with more columns or a different interleave is done in PowerShell using the New-VirtualDisk cmdlet. You can use the Get-VirtualDisk cmdlet to view the lay of the land for your Spaces.
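If the column and interleave relationship is still fuzzy, this small sketch may help. It only encodes the arithmetic described above: a stripe spans every column, each column receives one interleave's worth of data, and a mirror writes each column to as many disks as there are data copies. The helper names are made up for illustration.

```python
INTERLEAVE_KB = 256  # default interleave quoted above

def stripe_kb(columns, interleave_kb=INTERLEAVE_KB):
    """Unique data written per full stripe across all columns."""
    return columns * interleave_kb

def disks_per_stripe(columns, data_copies):
    """Physical disks touched by one stripe (columns x copies)."""
    return columns * data_copies

# One-column two-way mirror (my setup) vs. a two-column two-way mirror.
for columns in (1, 2):
    print(columns,
          stripe_kb(columns),
          disks_per_stripe(columns, data_copies=2))
```

This lines up with the text: one column in a two-way mirror means one stripe on two disks, and two columns is where a four-disk pool starts to make sense.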

In the end I have met all my initial goals around cost, size, noise, performance and power consumption. I’m very pleased with this mix of hardware, especially my alternate WD Red HDD choice. Spaces provides a robust and flexible subsystem which is a great alternative to legacy hardware/ software RAID. For more information about the inner workings of Spaces and additional design considerations please see the references section below. Please see parts two and three of this series for more information on Plex and the performance of this build.
