Our latest addition to the wildly popular Dell XC Web-Scale Converged Appliance series powered by Nutanix is the XC730 for graphics. The XC730 is a 2U node outfitted with 1 or 2 NVIDIA GRID K1 or K2 cards, suited for highly scalable, high-performance graphics within VDI deployments. The platform supports either Hyper-V with RemoteFX or VMware vSphere 6 with NVIDIA GRID vGPU. Citrix XenDesktop, VMware Horizon or Dell Wyse vWorkspace can be deployed on the platform and configured to run with the vGPU offering. Being a graphics platform, the XC730 currently supports up to 2 x 120W 10-, 12-, or 14-core Haswell CPUs with 16 or 32GB 2133MHz DIMMs. The platform requires a minimum of 2 SSDs and 4 HDDs with a range of options within each tier. All XC nodes come equipped with a 64GB SATADOM to boot the hypervisor and 128GB total spread across the first 2 SSDs for the Nutanix Home. Don’t know what mix of components to choose or how to size your environment? No problem, we create optimized platforms and validate them to make your life easier.

Optimized High Performance Graphics

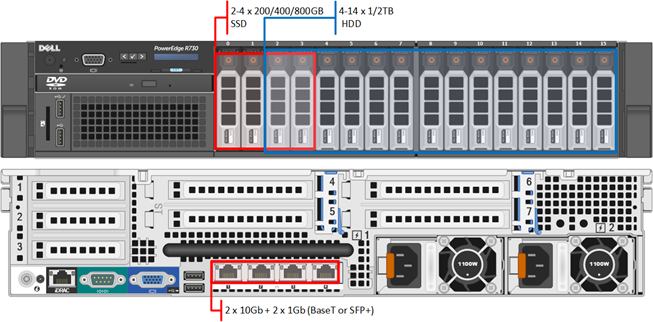

Our validated platform for the XC730 is a high-end “B7” variant that provides the absolute pinnacle of graphics performance in an HCI offering: 14-core CPUs, 256GB RAM, dual K2s, 2 x 400GB SSDs and 4 x 1TB HDDs. This is all about graphics so we need more wattage, and the XC730 comes equipped with dual 1100W PSUs. iDRAC8 comes standard in the XC Series, as does your choice of 10Gb SFP+ or BaseT Ethernet adapters. I chose this mix of components to provide a very high-end experience and to support the maximum number of users allowed by the K2 vGPU profiles. Watch this space for additional optimized platforms designed to remove the guesswork from sizing graphics in VDI. Even when GPUs are present, the basic laws of VDI still apply, which state: thou shalt exhaust CPU first. Dual 14-core parts will ensure that doesn’t happen. Make sure to check out our Appliance Architectures at the bottom of this post for more detailed info.

NVIDIA GRID vGPU

The cream of the crop in server virtualized graphics comes courtesy of NVIDIA with the K1 and K2 Kepler-based boards. The K2 has 4x the CUDA cores of the K1 board, with a slightly lower core clock and less total memory. The K2 is the board you want for fewer, higher-end users. The K1 is appropriate for a higher overall number of lower-end graphical users. vGPU is where the magic happens, as graphics are hardware-accelerated for the virtual environment running within vSphere. Each desktop VM within the vGPU environment runs a native set of the NVIDIA drivers and addresses a real slice of the server-grade GPU. The graphic below from NVIDIA portrays this quite well.

(image courtesy of NVIDIA)

The magic of vGPU happens within the profiles that ultimately get assigned. Both K1 and K2 in conjunction with vGPU have a preset number of profiles that control the amount of graphics memory assigned to each desktop along with the total number of desktops that can be supported by each card. The profile pattern is K2xxx for K2 and K1xxx for K1, with the smaller index identifying a greater user density. The K280Q and K180Q are basically pass-through profiles where an entire physical GPU is dedicated to a single desktop VM. You’ll notice how the densities change per card and per GPU depending on how these profiles are assigned. Lower performance = greater densities, with up to 64 total users possible on an XC730 with dual K1 cards. In the case of the K2, the maximum number of users possible on a single XC730 with dual cards is 32.

So how does it fit?

When designing a solution using the XC730, all of the normal Nutanix and XC rules apply, including a minimum of 3 nodes in a cluster and a CVM running on every node. Since the XC730 is a graphics appliance, it is recommended to have additional XC630s in the architecture to run the VDI infrastructure management VMs as well as the users not requiring high-performance graphics. Nothing will stop you from running mgmt infra on your XC730 nodes, but financial practicality will probably dictate that you use XC630s for this purpose. Scale of either is unlimited.

It is entirely acceptable (and expected) to mix XC730 and XC630 nodes within the same NDFS cluster. The boundaries you will need to draw will be around your vSphere HA clusters, separating each function into a discrete cluster of up to 64 nodes, as depicted below. This allows each discrete layer to scale independently while providing dedicated N+1 HA for each function. Due to the nature of graphics virtualization, vMotion is not currently supported when GPUs are attached, and neither are automated HA VM restarts. HA in this particular scenario would be cold should a node in the cluster fail. With pooled desktops and mobilized user data, this should equate to only a minor inconvenience in the worst case.
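As a rough illustration of the N+1 and 64-node boundaries described above, the following Python sketch estimates how many XC730 nodes and vSphere HA clusters a graphics layer would need. It is a simplification under stated assumptions: every node hosts the same number of users, each HA cluster reserves exactly one spare node, and CVM overhead and the NDFS 3-node minimum are ignored. The function name and return keys are hypothetical.

```python
import math

# vSphere HA cluster size limit cited in this post.
MAX_NODES_PER_HA_CLUSTER = 64


def size_graphics_layer(total_users: int, users_per_node: int,
                        max_cluster_nodes: int = MAX_NODES_PER_HA_CLUSTER) -> dict:
    """Estimate node and HA cluster counts for a graphics layer with N+1 HA.

    ASSUMPTION: one spare node per HA cluster, so each cluster carries load
    on at most (max_cluster_nodes - 1) nodes.
    """
    if total_users <= 0 or users_per_node <= 0:
        raise ValueError("user counts must be positive")
    if max_cluster_nodes < 2:
        raise ValueError("N+1 needs at least 2 nodes per cluster")

    active_nodes = math.ceil(total_users / users_per_node)
    usable_per_cluster = max_cluster_nodes - 1
    clusters = math.ceil(active_nodes / usable_per_cluster)
    return {
        "active_nodes": active_nodes,           # nodes carrying desktops
        "ha_clusters": clusters,                # discrete vSphere HA clusters
        "total_nodes": active_nodes + clusters  # active plus one spare each
    }


if __name__ == "__main__":
    # 1,000 graphics users at 32 users per node (dual K2, densest profile)
    print(size_graphics_layer(1000, 32))
```

Because GPU-attached desktops restart cold, the spare node here is capacity held in reserve for desktops to be powered on again after a failure, not a target for live migration.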

As is the case with any Nutanix deployment, very large NDFS clusters can be created with multiple hypervisor pods within a single namespace. Below is an example depiction of a 30K user deployment within a single site. Each compute pod is composed of the theoretical maximum number of users within a VDI farm, serviced by a dedicated pair of mgmt nodes for each farm. Mgmt is separated into a discrete cluster for all farms and compute is separated per the HA maximum. This is a complicated architecture, but it demonstrates the capabilities of NDFS when designing for a very large scale use case.

This is just the tip of the iceberg! For more information on the XC series architecture, platforms, Nutanix, test results of our XC730 platform and more, please see our latest Appliance Architectures which include the XC730:
