Be sure to also check out: Hyper-V Design for NUMA Architecture and Alignment

Non-Uniform Memory Access (NUMA) has been with us for a while now and was created to overcome the scalability limits of the Symmetric Multi-Processing (SMP) CPU architecture. In SMP, all memory access is tied to a single shared physical bus. This design has obvious limitations, especially with higher CPU counts and increased traffic on the bus.

What is NUMA alignment?

In the case of virtualization, VMs created on a physical server receive their vCPU entitlements from the physical CPU cores of their hosts (um, obviously). Hypervisors use many tricks to oversubscribe CPU and run as many VMs on a host as possible while ensuring good performance. Better utilization of physical resources = better return on investment and use of the company dollar.

To design your virtual environment to be NUMA aligned means ensuring that your VMs receive vCPUs and RAM tied to a single physical CPU (pCPU), so that the memory and pCPU cores they access are directly connected and not accessed via traversal of a CPU interconnect (QPI). As fast as interconnects are these days, they are still not as fast as the connection between a DIMM and pCPU. Ensuring that your virtual environments are NUMA aligned means ensuring the highest possible performance.

Running many smaller 1 or 2 vCPU VMs with lower amounts of RAM, such as virtual desktops, isn’t as big of a concern, and we generally see very good oversubscription at high performance levels with less need to span NUMA. In the testing my team does at Dell for VDI architectures, we see somewhere between 9-12 vCPUs per pCPU core depending on the workload and VM profile. For larger server-based VMs, such as those used for RDSH sessions, this ratio is smaller and more impactful because there are fewer overall VMs consuming a greater amount of resources. In short, NUMA alignment is much more important when you have fewer total VMs with larger vCPU and RAM allocations.

To achieve sustained high performance for a dense server workload, it is important to consider:

- The number of vCPUs assigned to each VM within a NUMA node to avoid contention.

- The amount of RAM assigned to each VM to ensure that all memory access remains local within a physical NUMA node.
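The two considerations above can be expressed as a simple sizing check. This is only a minimal sketch: the `NumaNode` and `VmProfile` names and the example numbers are hypothetical, and it treats all of a node's memory as available to the VM, which ignores hypervisor overhead and other VMs already on the node.

```python
from dataclasses import dataclass


@dataclass
class NumaNode:
    physical_cores: int  # cores on one pCPU, hyperthreads not counted
    memory_gb: float     # RAM directly attached to that pCPU


@dataclass
class VmProfile:
    vcpus: int
    memory_gb: float


def is_numa_aligned(vm: VmProfile, node: NumaNode) -> bool:
    """Return True when the VM's vCPUs and RAM both fit inside one node."""
    return vm.vcpus <= node.physical_cores and vm.memory_gb <= node.memory_gb


# Hypothetical node: 6 physical cores with 48 GB attached
node = NumaNode(physical_cores=6, memory_gb=48)
print(is_numa_aligned(VmProfile(vcpus=4, memory_gb=32), node))  # True
print(is_numa_aligned(VmProfile(vcpus=8, memory_gb=32), node))  # False, too many vCPUs
print(is_numa_aligned(VmProfile(vcpus=4, memory_gb=64), node))  # False, too much RAM
```

A VM that fails either test is a candidate for resizing, or at least for a closer look at how it behaves once it is running.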

vNUMA in vSphere

In vSphere 5.0 and later, the virtual NUMA topology can be exposed to the guest OS as long as the VM is hardware version 8 or later. vNUMA is enabled by default if a VM is configured with more than 8 total vCPUs (or 4 vCPU cores + 2 cores per NUMA node) and will match the host where it was powered on until the VM is restarted. This minimum number can be adjusted if desired as shown later.

When the NUMA topology is exposed to a guest VM, one of two things happens via the ESXi NUMA scheduler:

- If a VM is assigned a vCPU value less than or equal to the number of total logical cores in a physical NUMA node, then this VM will be assigned to a single physical NUMA node and consume memory and cores local to that node (ideal). This remains true until that VM is configured to access more memory than what is available in the single physical NUMA node, which would result in the VM spanning to the remote NUMA node (less than ideal).

- If a VM is assigned more vCPUs than cores that exist in a single physical NUMA node, it will be assigned to at least 2 physical NUMA nodes. This will satisfy the resource requirements of the VM but will come with a performance penalty as the VM spans local and remote NUMA nodes (less than ideal).

The NUMA scheduler assigns each VM to a “home node” at boot time, which is a defined physical NUMA node within the server. ESXi tracks NUMA nodes within the System Resource Affinity Table (SRAT). When calculating a VM's NUMA home node, hyperthreading is ignored, so if your VM is configured with more vCPUs than a single physical CPU has cores, it will be assigned to 2 or more NUMA home nodes. ESXi will ensure VM memory locality by locking a VM to run from the same home node that contains its assigned memory. If system load changes such that a VM’s home node is no longer optimal, ESXi can dynamically migrate a VM to a new home node. Part of this migration could also include a migration of the VM’s memory assignment to prevent spanning NUMA if possible. It is important to note that if you set CPU affinity for a VM, this essentially disables the NUMA scheduler for that VM.
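To make the home node rule concrete, here is a rough sketch of the arithmetic. It is not ESXi's actual algorithm. It just models the behavior described above, counting physical cores only, and caps the result at the number of nodes in the host. The function name and parameters are hypothetical.

```python
import math


def home_node_count(vcpus: int, physical_cores_per_node: int, host_nodes: int) -> int:
    """Estimate how many NUMA home nodes a VM lands on.

    Hyperthreads are deliberately left out: only physical cores count.
    """
    if vcpus < 1 or physical_cores_per_node < 1 or host_nodes < 1:
        raise ValueError("all arguments must be positive")
    needed = math.ceil(vcpus / physical_cores_per_node)
    return min(needed, host_nodes)


# Dual-socket host with 6 physical cores per socket
print(home_node_count(6, 6, 2))   # 1
print(home_node_count(12, 6, 2))  # 2
print(home_node_count(13, 6, 2))  # 2
```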

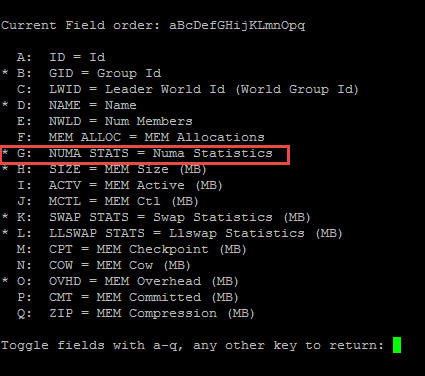

There are 2 ways you can verify how many NUMA nodes are present according to what ESXi sees, via esxtop or esxcli. From the console of one of your hosts run esxtop. Once running, press "m" to enable memory stats. Later, to see the NUMA stats as they apply to VMs, from esxtop press "f" to change the fields displayed, select NUMA Stats with "g" and hit any key to exit.

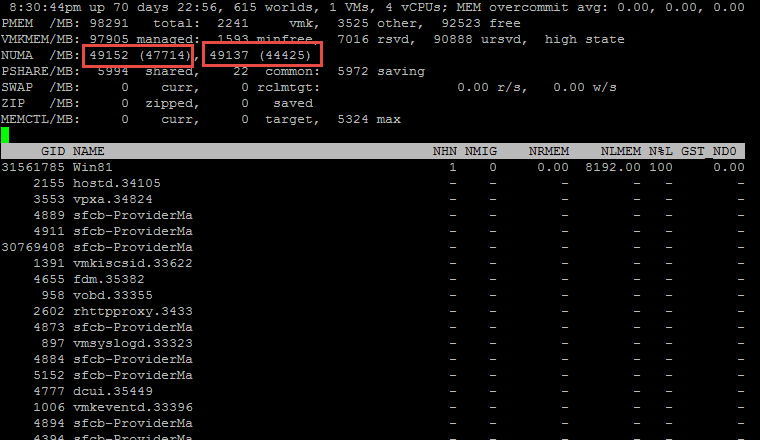

As you can now see, ESXi is aware of 2 physical NUMA nodes in my test system, each with 49GB of memory directly attached.

The second method is the "easy" way but displays less information overall. Run the following esxcli command which also reveals that this host has 2 NUMA nodes:

esxcli hardware memory get | grep NUMA

Further, just to completely remove any doubt about the number of logical cores available and to which physical NUMA node they belong, consider the following output from esxcli, which shows 12 logical CPUs assigned to each physical node.

esxcli hardware cpu list | grep Node
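If you would rather not count the lines by eye, the grep output can be tallied with a few lines of script. This sketch assumes each matching line looks like `Node: 0`. That format is my assumption, so adjust the pattern to whatever your host prints. The sample input below is illustrative and was not captured from a real host.

```python
import re
from collections import Counter


def logical_cpus_per_node(grep_output: str) -> dict:
    """Count how many 'Node: N' lines appear for each NUMA node N."""
    counts = Counter()
    for line in grep_output.splitlines():
        # Assumed line format: optional indent, 'Node:', then the node number
        match = re.match(r"\s*Node:\s*(\d+)\s*$", line)
        if match:
            counts[int(match.group(1))] += 1
    return dict(sorted(counts.items()))


# Illustrative input only: 12 lines for each of two nodes
sample = "\n".join(["   Node: 0"] * 12 + ["   Node: 1"] * 12)
print(logical_cpus_per_node(sample))  # {0: 12, 1: 12}
```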

NUMA Alignment in vSphere

The basic principles of NUMA are the same when dealing with vSphere or Hyper-V, however they are executed slightly differently from a VM perspective. My vSphere cluster shares the same specs as my Windows cluster: 2 x Dell PowerEdge R610s with dual X5670 6-core CPUs and 96GB RAM running vSphere 5.5 U2 in an HA cluster, with 24 total logical hyperthreaded processors as reported by ESXi.

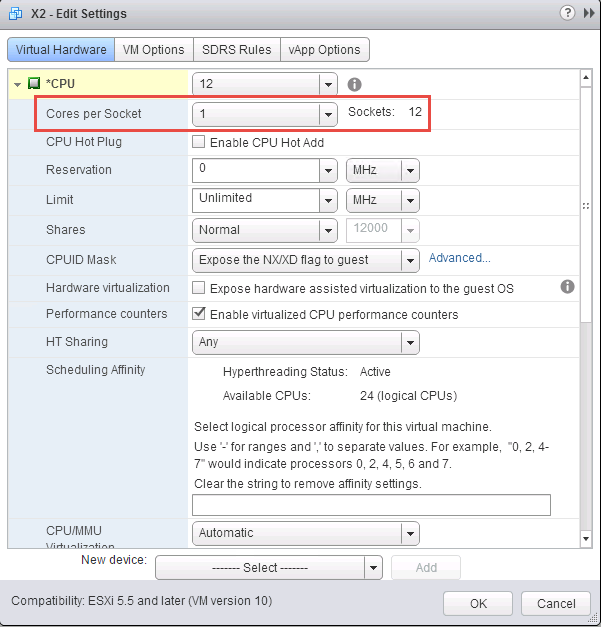

vNUMA can be altered on a VM by VM basis through vCPU assignments or via advanced host settings if global tweaks need to be made. It’s worth noting here that although the vSphere Client will allow you to assign CPU cores as well as “cores per socket” to your VMs, this is generally not required. VMware added this option in previous versions to provide the ability to control the number of sockets seen by the guest OS as a way to get around certain software restrictions and limitations that likely no longer apply.

In vSphere a vCPU is a vCPU and will be scheduled according to how it fits into the exposed vNUMA topology. You can specify the cores per socket, which will affect the NUMA topology presented to the VM, but also realize that this could have a negative impact on how well that VM plays within the presented topology. Unless you have a good reason to change this, leave the default value of 1 core per socket when configuring VMs in ESXi.

In my test servers a single VM can be configured with up to 24 vCPUs and, if it's a Windows server OS, up to 24 sockets, which constitutes the maximum logical configuration of all available cores on this host. Take for example a Server 2012 R2 VM called "X2" configured with 12 vCPUs using the default of 1 core per socket.

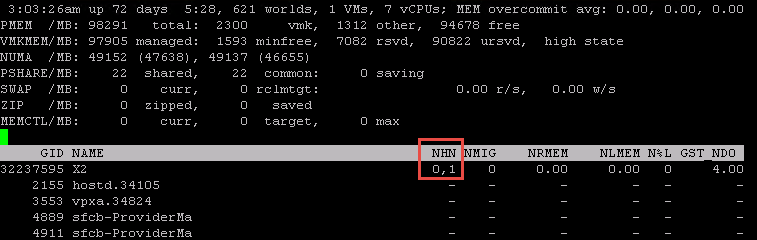

Because the number of vCPUs is >6, ESXi assigns this VM to both NUMA home nodes, shown here in esxtop via the NUMA Home Node (NHN) value. Remember that the NUMA Scheduler ignores the hyperthreaded cores when making this calculation:

Even though at 12 vCPUs this VM fits within the logical boundaries of a single physical NUMA node, it is assigned more vCPUs than the 6 physical cores that exist in a single NUMA node, which allows ESXi to schedule this VM's CPU consumption on either NUMA node. If this VM were to be assigned 13 vCPUs, not only would it be assigned to both NUMA home nodes as we see above, but it would span NUMA to fulfill its operational requirements. Per the diagram below you can see that two similarly configured VMs fit within the constraints of each NUMA node from an assigned memory perspective, but because X2 is configured with more vCPUs than the physical cores that exist on one NUMA node, it must cross the QPIs to fully satisfy its entitlement.

Another example of vNUMA in action is the assignment of 24 vCPUs to this VM using an arbitrary 3 cores per socket. vNUMA creates 8 NUMA nodes (sockets) with 3 processors assigned to each.
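The socket count in that example is just a division. As a quick sketch, with a hypothetical helper name, and assuming the vCPU count must divide evenly by the cores per socket:

```python
def virtual_sockets(vcpus: int, cores_per_socket: int) -> int:
    """Number of virtual sockets presented for a given vCPU layout."""
    if vcpus < 1 or cores_per_socket < 1:
        raise ValueError("vcpus and cores_per_socket must be positive")
    if vcpus % cores_per_socket != 0:
        # Assumption: the vCPU count has to be a multiple of cores per socket
        raise ValueError("vCPU count must be a multiple of cores per socket")
    return vcpus // cores_per_socket


print(virtual_sockets(24, 3))  # 8 sockets with 3 cores each
print(virtual_sockets(12, 1))  # 12 sockets, the 1 core per socket default
```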

The other example that would cause a VM to span NUMA would be if it were assigned more memory than what exists within a single physical NUMA node. For this example I've reconfigured my X2 VM with 6 vCPUs to ensure CPU locality but assigned and reserved more memory than what exists within the NUMA home node to which it is assigned.

For NUMA spanning to actually take place, the VM has to consume more memory than what exists in the NUMA home node it was assigned. To do this I used a memory allocation tool and consumed 48GB of active memory. Hopping back over to esxtop we can see exactly the amount of memory being used locally (NLMEM) vs memory spanning to the remote NUMA node (NRMEM).

To illustrate what is happening here, X2 is utilizing memory from its assigned NUMA home node but is also having to access the remote NUMA node to store its memory pages. If the cluster didn't have enough resources available for the configuration of the VM, it would fail to power on.

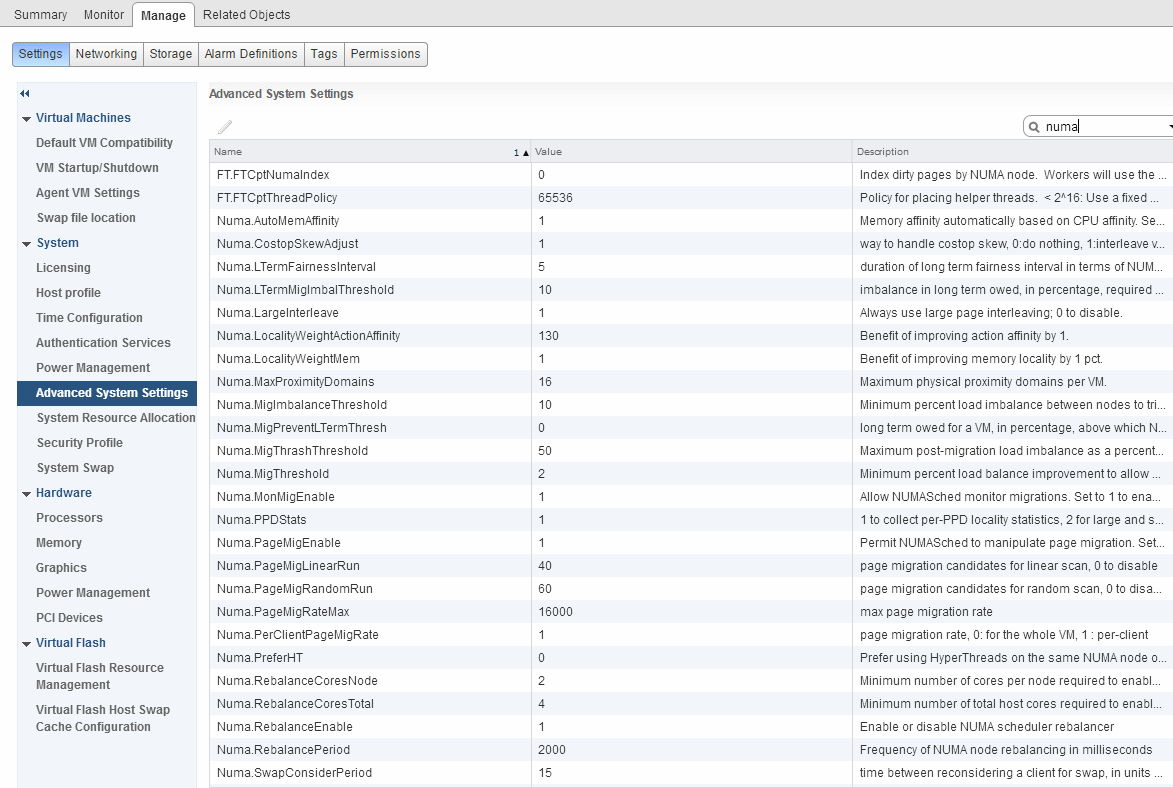
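A handy way to summarize the NLMEM and NRMEM values is as a single locality percentage. The sketch below uses a hypothetical function name and made-up values, not the readings from my test system.

```python
def memory_locality_pct(nlmem_mb: float, nrmem_mb: float) -> float:
    """Percentage of a VM's memory that sits on its NUMA home node."""
    if nlmem_mb < 0 or nrmem_mb < 0:
        raise ValueError("memory values cannot be negative")
    total = nlmem_mb + nrmem_mb
    if total == 0:
        return 100.0  # nothing allocated yet, so nothing is remote
    return 100.0 * nlmem_mb / total


# Made-up example: 40 GB local and 8 GB remote, expressed in MB
print(round(memory_locality_pct(40960, 8192), 1))  # 83.3
```

Anything below 100 means the VM is reaching across the interconnect for some of its pages.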

There are a number of additional tweaks available in the Advanced System Settings for a given host or at the individual VM level. Be extremely careful here and only change something if you understand the setting and have a clear purpose for changing it.

Key Takeaways

- As a matter of general best practice, assign each VM a vCPU count less than or equal to the number of physical cores on a single NUMA node.

- Designing your virtual infrastructure to work in concert with the physical NUMA boundaries of your servers will net optimal performance and minimize contention.

- A single VM consuming more vCPUs than a single NUMA node contains will be scheduled across multiple NUMA nodes, causing possible CPU contention.

- One or more VMs consuming more RAM than a single NUMA node contains will span NUMA and store a percentage of their pages in the remote NUMA node, causing reduced performance.

- In vSphere, vNUMA is enabled by default for VMs with more than 8 vCPUs (adjustable), but enabling "hot add CPU" or configuring CPU affinity will essentially disable this feature. Be careful if you do this.

- When the NUMA Scheduler calculates which NUMA home node to assign a VM to, hyperthreading is ignored. This calculation is based on the physical cores of the CPUs.
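The vNUMA conditions from this post can be rolled into one quick check. This is a simplified sketch of the rules as described here, not VMware's logic, and the function and parameter names are hypothetical. The default `min_vcpus=9` encodes "more than 8 total vCPUs".

```python
def vnuma_exposed(vcpus: int, hardware_version: int,
                  cpu_hot_add: bool = False, cpu_affinity: bool = False,
                  min_vcpus: int = 9) -> bool:
    """Rough check of whether a VM would see a virtual NUMA topology."""
    if hardware_version < 8:
        return False  # vNUMA needs hardware version 8 or later
    if cpu_hot_add or cpu_affinity:
        return False  # either option essentially disables the feature
    return vcpus >= min_vcpus


print(vnuma_exposed(12, hardware_version=10))                    # True
print(vnuma_exposed(8, hardware_version=10))                     # False
print(vnuma_exposed(12, hardware_version=10, cpu_hot_add=True))  # False
```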

Resources:

http://lse.sourceforge.net/numa/faq/

NUMA in ESXi

http://www.vmware.com/pdf/Perf_Best_Practices_vSphere5.5.pdf

https://blogs.vmware.com/vsphere/2013/10/does-corespersocket-affect-performance.html

http://pubs.vmware.com/vsphere-55/topic/com.vmware.ICbase/PDF/vsphere-esxi-vcenter-server-55-resource-management-guide.pdf

https://pubs.vmware.com/vsphere-60/topic/com.vmware.ICbase/PDF/vsphere-esxi-vcenter-server-60-resource-management-guide.pdf
