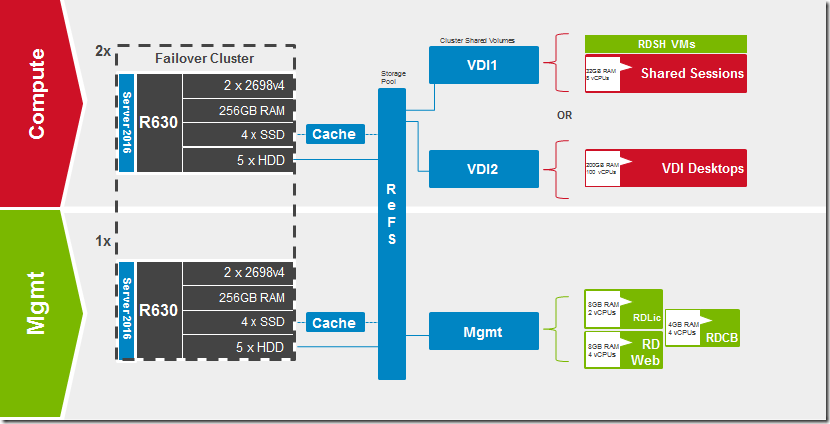

Make sure to check out my series on RDS in Server 2016 for more detailed information on designing and deploying RDSH or RDVH.

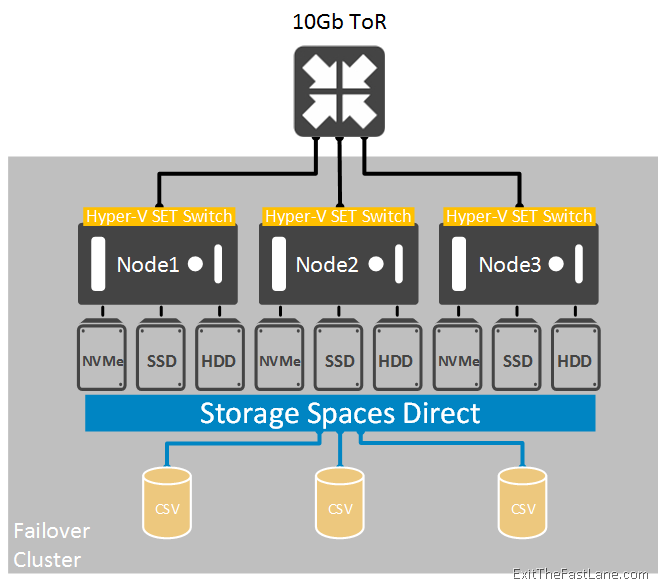

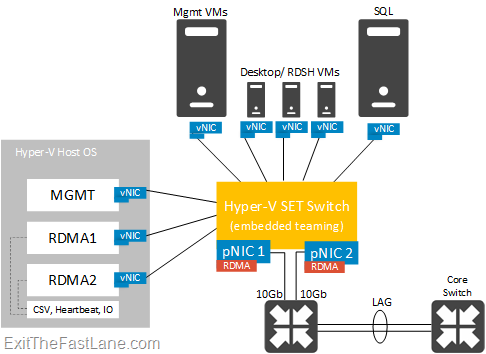

For Microsoft purists looking to deploy VDI or another workload on the native Microsoft stack, this is the solution for you! Storage Spaces Direct (S2D) is Microsoft’s new kernel-mode software-defined storage model, exclusive to Server 2016 Datacenter Edition. In the last incarnation of Storage Spaces on Server 2012 R2, JBODs were required, with SAS cables running to each participating node, which could then host SMB namespaces. No longer! Server 2016 Datacenter Edition, local disks and Ethernet (10Gb + RDMA recommended) are all you need now. Setup is executed almost exclusively in PowerShell, which can of course be scripted. Microsoft has positioned its first true HCI stack at the smaller end of the small-to-medium business market, with a maximum scale of 16 nodes. The S2D architecture runs natively on a minimum of 2 nodes configured in a Failover Cluster with 2-way mirroring.

NVMe, SSD or HDD devices installed in each host are contributed to a 2-tier shared storage architecture using clustered mirror or parity resiliency models. S2D supports hybrid or all-flash models, with usable disk capacity calculated using only the capacity tier. SSD or NVMe can be used for caching, and SSD or HDD can be used for capacity. A mix of all three can also be used, with NVMe providing cache and SSD and HDD providing capacity. Each disk tier is extensible and can be scaled up or down at any time. Dynamic cache bindings keep the ratio of cache to capacity disks balanced for any configuration, regardless of whether cache or capacity disks are added or removed. The same is true when a drive fails, in which case S2D self-heals to restore a proper balance.

Similar to VMware vSAN, the storage architecture changes a bit between hybrid and all-flash. In the S2D hybrid model, the cache tier is used for both writes and reads. In the all-flash model, the cache tier is used only for writes. Cluster Shared Volumes are created within the S2D storage pool and shared across all nodes in the cluster. Consumed capacity in the cluster is determined by provisioned volume sizes, not by actual storage usage within a given volume.
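The dynamic cache binding described above can be pictured with a small Python model. This is a simplified sketch, not how S2D is implemented. It assumes capacity drives are bound to cache drives round-robin within a node, and that everything is rebound when the drive list changes. The `bind_cache` and `cache_mode` helpers and the drive names are hypothetical. The example uses one node from the lab below (4 SSD cache, 5 HDD capacity).

```python
from itertools import cycle


def bind_cache(cache_drives, capacity_drives):
    """Map each cache drive to the capacity drives it fronts (round-robin model)."""
    if not cache_drives:
        raise ValueError("at least one cache drive is required")
    bindings = {cache: [] for cache in cache_drives}
    # zip stops after the last capacity drive; cycle() reuses cache drives as needed.
    for capacity, cache in zip(capacity_drives, cycle(cache_drives)):
        bindings[cache].append(capacity)
    return bindings


def cache_mode(capacity_media):
    """Hybrid (HDD capacity) caches reads and writes; all-flash caches writes only."""
    return "writes only" if capacity_media in ("SSD", "NVMe") else "reads and writes"


if __name__ == "__main__":
    ssds = [f"ssd{i}" for i in range(4)]  # hypothetical names: 4 x 400GB SSD cache
    hdds = [f"hdd{i}" for i in range(5)]  # hypothetical names: 5 x 1TB HDD capacity
    print(bind_cache(ssds, hdds))
    # Simulate losing one cache drive: its HDDs are rebound to the surviving SSDs.
    print(bind_cache(ssds[:3], hdds))
    print(cache_mode("HDD"))
```

With 5 HDDs behind 4 SSDs, one cache drive fronts two capacity drives while the others front one. Losing a cache drive shifts its HDDs onto the survivors. Microsoft's S2D cache guidance recommends making the number of capacity drives a multiple of the number of cache drives for more even performance, and the uneven split here shows why.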

| Resiliency | Fault Tolerance | Storage Efficiency | Minimum # hosts |
|---|---|---|---|
| 2-way mirror | 1 | 50% | 2 |
| 3-way mirror | 2 | 33% | 3 |
| Dual parity | 2 | 50-80% | 4 |
| Mixed | 2 | 33-80% | 4 |
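The Python sketch below turns the resiliency table into numbers. It encodes the table rows as data and assumes capacity-tier sizes are given in TB. The helper names are hypothetical. Parity efficiency is returned as the table's range because the actual figure depends on cluster size, and the footprint helper covers mirrors only.

```python
# Rows from the resiliency table: fault tolerance, efficiency range, minimum hosts.
RESILIENCY = {
    "2-way mirror": {"tolerates": 1, "efficiency": (1 / 2, 1 / 2), "min_hosts": 2},
    "3-way mirror": {"tolerates": 2, "efficiency": (1 / 3, 1 / 3), "min_hosts": 3},
    "dual parity": {"tolerates": 2, "efficiency": (0.50, 0.80), "min_hosts": 4},
    "mixed": {"tolerates": 2, "efficiency": (1 / 3, 0.80), "min_hosts": 4},
}

# Copies kept by each mirror type, used to compute a volume's pool footprint.
MIRROR_COPIES = {"2-way mirror": 2, "3-way mirror": 3}


def options_for(hosts):
    """Resiliency types available at a given cluster size (S2D supports 2-16 nodes)."""
    if not 2 <= hosts <= 16:
        raise ValueError(f"S2D clusters need 2-16 nodes, got {hosts}")
    return [name for name, row in RESILIENCY.items() if hosts >= row["min_hosts"]]


def usable_range_tb(capacity_tier_tb, mode):
    """Usable TB range; only the capacity tier counts, cache drives add nothing."""
    low, high = RESILIENCY[mode]["efficiency"]
    return capacity_tier_tb * low, capacity_tier_tb * high


def mirror_footprint_tb(volume_tb, mode):
    """Pool space consumed by a provisioned mirror volume, however full it is."""
    return volume_tb * MIRROR_COPIES[mode]


if __name__ == "__main__":
    raw_tb = 3 * 5 * 1.0  # example: 3 hosts x 5 x 1TB capacity drives
    print(options_for(3))
    print(usable_range_tb(raw_tb, "3-way mirror"))
    print(mirror_footprint_tb(2, "3-way mirror"))
```

For a 3-node cluster with 15 TB of HDD capacity, 3-way mirroring yields roughly 5 TB usable. A 2 TB volume consumes 6 TB of pool space as soon as it is provisioned, which is why consumed capacity tracks provisioned volume sizes rather than data written.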

Much more information on S2D resiliency, with examples, is available here. As a workload, RDS layers on top of S2D just as it would on any other Failover Cluster configuration. Check out my previous series for a deeper dive into the native RDS architecture.

Lab Environment

For this exercise I’m running a 3-node cluster on Dell R630s with the following specs per node:

- Dual E5-2698v4

- 256GB RAM

- HBA330

- 4 x 400GB SAS SSD

- 5 x 1TB SAS HDD

- Dual Mellanox ConnectX-3 NICs with RDMA over Converged Ethernet (RoCE)

- Windows Server 2016 Datacenter (1607)
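A short Python sketch can tally the per-node specs for the whole cluster. `NodeSpec` and `cluster_totals` are hypothetical names. Drive sizes come from the list and are in vendor decimal GB, and usable capacity assumes a 3-way mirror over the capacity tier only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Per-node memory and drive layout from the lab list (decimal GB)."""
    ram_gb: int
    cache_drives: int
    cache_drive_gb: int
    capacity_drives: int
    capacity_drive_gb: int


def cluster_totals(node, nodes):
    """Multiply per-node resources by the number of nodes in the cluster."""
    return {
        "ram_gb": node.ram_gb * nodes,
        "cache_gb": node.cache_drives * node.cache_drive_gb * nodes,
        "capacity_gb": node.capacity_drives * node.capacity_drive_gb * nodes,
    }


# One R630 as listed: 256GB RAM, 4 x 400GB SSD cache, 5 x 1TB HDD capacity.
R630 = NodeSpec(ram_gb=256, cache_drives=4, cache_drive_gb=400,
                capacity_drives=5, capacity_drive_gb=1000)

if __name__ == "__main__":
    totals = cluster_totals(R630, nodes=3)
    print(totals)
    # Only the capacity tier counts; a 3-way mirror stores three copies of all data.
    print("3-way mirror usable GB:", totals["capacity_gb"] // 3)
```

Windows reports sizes in binary units, so expect the figures it displays to come in somewhat lower than these decimal totals.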

The basic high-level architecture of this deployment:

Part 1: Intro and Architecture (you are here)

Part 2: Prep & Configuration

Part 3: Performance & Troubleshooting
