Part 2: Prep & Configuration (you are here)

Part 3: Performance & Troubleshooting

Make sure to check out my series on RDS in Server 2016 for more detailed information on designing and deploying RDSH or RDVH.

Prep

The number 1 rule of clustering with Microsoft is homogeneity. All nodes within a cluster must have identical hardware and patch levels. This is very important. The first step is to check all disks installed to participate in the storage pool, bring all online and initialize. This can be done via PoSH or UI.

Once initialized, confirm that all disks can pool:

Get-PhysicalDisk -CanPool $true | sort model

Install Hyper-V and Failover Clustering on all nodes:

Install-WindowsFeature -name Hyper-V, Failover-Clustering -IncludeManagementTools -ComputerName InsertName -Restart

Run cluster validation, note this command does not exist until the Failover Clustering feature has been installed. Replace portions in red with your custom inputs. Hardware configuration and patch level should be identical between nodes or you will be warned in this report.

Test-Cluster -Node node1, node2, etc -include "Storage Spaces Direct", Inventory, Network, "System Configuration"

The report will be stored in c:\users\<username>\AppData\Local\Temp

Networking & Cluster Configuration

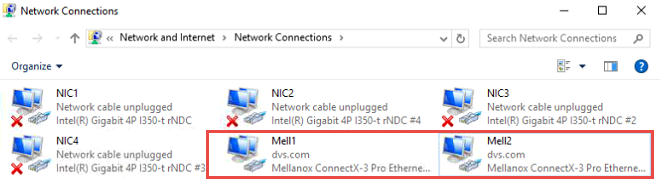

We'll be running the new Switch Embedded Team (SET) vSwitch feature, new for Hyper-V in Server 2016.Repeat the following steps on all nodes in your cluster. First, I recommend renaming the interfaces you plan to use for S2D to something easy and meaningful. I'm running Mellanox cards so called mine Mell1 and Mell2. There should be no NIC teaming applied at this point!

Configure the QoS policy, first enable the datacenter bridging (DCB) feature which will allow us to prioritize certain services the traverse the Ethernet.

Install-WindowsFeature -Name Data-Center-Bridging

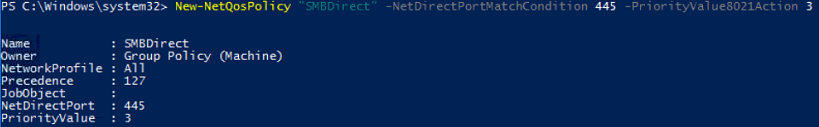

Create a new policy for SMB Direct with a priority value of 3 which marks this service as “critical” per the 802.1p standard:

New-NetQosPolicy "SMBDirect" -NetDirectPortMatchCondition 445 -PriorityValue8021Action 3

Allocate at least 30% of the available bandwidth to SMB for the S2D solution:

New-NetQosTrafficClass "SMBDirect" -Priority 3 -BandwidthPercentage 30 -Algorithm ETS

Create the SET switch using the adapter names you specified previously. Items in red should match your choices or naming standards. Note that this SETSwitch is ultimately a vNIC and can receive an IP address itself:

New-VMSwitch -Name SETSwitch -NetAdapterName "Mell1","Mell2" -EnableEmbeddedTeaming $true

Add host vNICs to the SET switch you just created, these will be used by the management OS. Items in red should match your choices or naming standards. Assign static IPs to these vNICs as required as these interfaces are where RDMA will be enabled.

Add-VMNetworkAdapter –SwitchName SETSwitch –name SMB_1 –managementOS

Add-VMNetworkAdapter –SwitchName SETSwitch –name SMB_2 –managementOS

Once created, the new virtual interface will be visible in the network adapter list by running get-netadapter.

Optional: Configure VLANs for the new vNICs which can be the same or different but IP them uniquely. If you don’t intend to tag VLANs in your cluster or have a flat network with one subnet, skip this step.

Set-VMNetworkAdapterVLAN –VMNetworkAdapterName “SMB_1” –VlanId 00 –Access –ManagementOS

Set-VMNetworkAdapterVLAN –VMNetworkAdapterName “SMB_2” –VlanId 00 –Access –ManagementOS

Verify your VLANs and vNICs are correct. Notice mine are all untagged since this demo is in a flat network.

Get-VMNetworkAdapterVlan –ManagementOS

Restart each vNIC to activate the VLAN assignment.

Restart-NetAdapter “vEthernet (SMB_1)”

Restart-NetAdapter “vEthernet (SMB_2)”

Enable RDMA on each vNIC.

Enable-NetAdapterRDMA “vEthernet (SMB_1)”, “vEthernet (SMB_2)”

Next each vNIC should be tied directly to a preferential physical interface within the SET switch. In this example we have 2 vNICs and 2 physical NICs for a 1:1 mapping. Important to note: Although this operation essentially designates a vNIC assignment to a preferential pNIC, should the assigned pNIC fail, the SET switch will still load balance vNIC traffic across the surviving pNICs. It may not be immediately obvious that this is the resulting and expected behavior.

Set-VMNetworkAdapterTeamMapping -VMNetworkAdapterName "SMB_1" -ManagementOS –PhysicalNetAdapterName “Mell1”

Set-VMNetworkAdapterTeamMapping -VMNetworkAdapterName "SMB_2" -ManagementOS –PhysicalNetAdapterName “Mell2”

To quickly prove this, I have my vNIC “SMB_1” preferentially tied to the pNIC “Mell1”. SMB_1 has the IP address 10.50.88.82

Notice that even though I have manually disabled Mell1, the IP still responds to a ping from another host as SMB_1’s traffic is temporarily traversing Mell2:

Verify the RDMA capabilities of the new vNICs and associated physical interfaces. RDMA Capable should read true.

Get-SMBClientNetworkInterface

Build the cluster with a dedicated IP and slash subnet mask. This command will default to /24 but still might fail unless explicitly specified.

New-Cluster -name "Cluster Name" -Node Node1, Node2, etc -StaticAddress 0.0.0.0/24

Checking the report output stored in c:\windows\cluster\reports\, it flagged not having a suitable disk witness. This will auto-resolve later once the cluster is up.

The cluster will come up with no disks claimed and if there is any prior formatting, they must first be wiped and prepared. In PowerShell ISE, run the following script, enter your cluster name in the red text.

icm (Get-Cluster -Name S2DCluster | Get-ClusterNode) {Once successfully completed, you will see an output with all nodes and all disk types accounted for.

Update-StorageProviderCache

Get-StoragePool | ? IsPrimordial -eq $false | Set-StoragePool -IsReadOnly:$false -ErrorAction SilentlyContinue

Get-StoragePool | ? IsPrimordial -eq $false | Get-VirtualDisk | Remove-VirtualDisk -Confirm:$false -ErrorAction SilentlyContinue

Get-StoragePool | ? IsPrimordial -eq $false | Remove-StoragePool -Confirm:$false -ErrorAction SilentlyContinue

Get-PhysicalDisk | Reset-PhysicalDisk -ErrorAction SilentlyContinue

Get-Disk | ? Number -ne $null | ? IsBoot -ne $true | ? IsSystem -ne $true | ? PartitionStyle -ne RAW | % {

$_ | Set-Disk -isoffline:$false

$_ | Set-Disk -isreadonly:$false

$_ | Clear-Disk -RemoveData -RemoveOEM -Confirm:$false

$_ | Set-Disk -isreadonly:$true

$_ | Set-Disk -isoffline:$true

}

Get-Disk |? Number -ne $null |? IsBoot -ne $true |? IsSystem -ne $true |? PartitionStyle -eq RAW | Group -NoElement -Property FriendlyName

} | Sort -Property PsComputerName,Count

Finally, it’s time to enable S2D. Run the following command and select “yes to all” when prompted. Make sure to use your cluster name in red.

Enable-ClusterStorageSpacesDirect –CimSession S2DClusterStorage Configuration

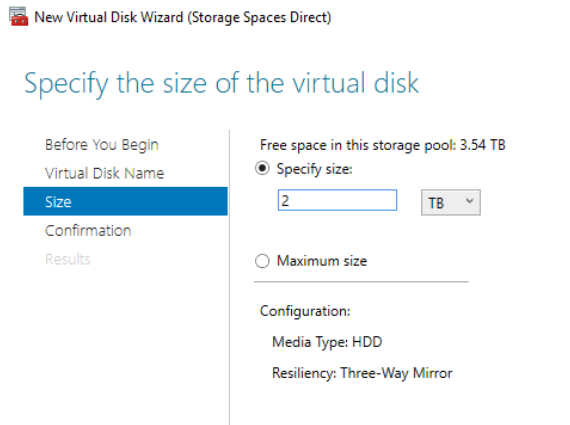

Once S2D is successfully enabled, there will be a new storage pool created and visible within Failover Cluster Manager. The next step is to create volumes within this pool. S2D will make some resiliency choices for you depending on how many nodes are in your cluster. 2 nodes = 2-way mirroring, 3 nodes = 3-way mirroring, if you have 4 or more nodes you can specify mirror or parity. When using a hybrid configuration of 2 disk types (HDD and SSD), the volumes reside within the HDDs as the SSDs simply provide caching for reads and writes. In an all-flash configuration only the writes are cached. Cache drive bindings are automatic and will adjust based on the number of each disk type in place. In my case, I have 4 SSDs + 5 HDDs per host. This will net a 1:1 cache:capacity map for 3 pairs of disks and a 1:2 ratio for the last 3. Microsoft’s recommendation is to make the number of cache drives a multiple of the number of capacity drives, for simple symmetry. If a host experiences a cache drive failure, the cache to capacity mapping will readjust to heal. This is why a minimum of 2 cache drives per host are recommended.Volumes can be created using PowerShell or Failover Cluster Manager by selecting the “new disk” option. This one simple PowerShell command does three things: creates the virtual disk, places a new volume on it and makes it a cluster shared volume. For PowerShell the syntax is as follows:

New-Volume –FriendlyName “Volume Name” –FileSystem CSVFS_ReFS –StoragePoolFriendlyName S2D* –size xTB

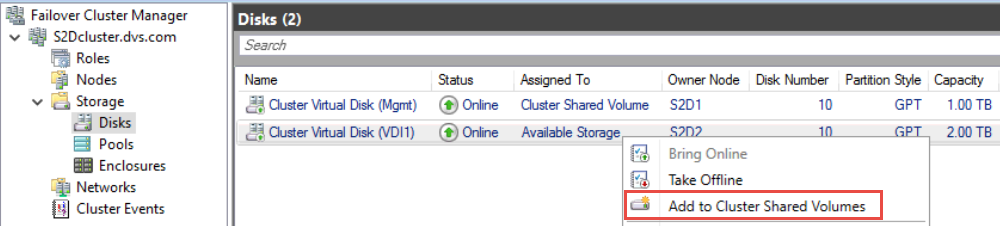

CSVFS_ReFS is recommended but CSVFS_NTFS may also be used. Once created these disks will be visible under the Disks selection within Failover Cluster Manager. Disk creation within the GUI is a much longer process but gives meaningful information along the way, such as showing that these disks are created using the capacity media (HDD) and that the 3-server default 3-way mirror is being used.

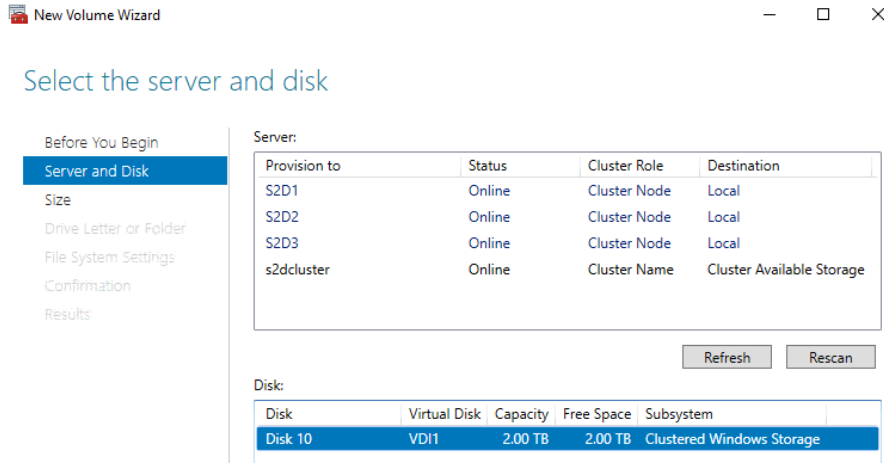

Once the virtual disk is created, next we need to create a volume within using another wizard. Select the vDisk created in the last step:

Storage tiering dictates that the volume size must match the size of the vDisk:

Skip the assignment of a drive letter, assign a label if you desire, confirm and create.

The final step is to add this new disk to a Cluster Shared Volume via Failover Cluster Manager. You’ll notice that the owner node will be automatically assigned:

Repeat this process to create as many volumes as required.

RDS Deployment

Install the RDS roles and features required and build a collection. See this post for more information on RDS installation and configuration. There is nothing terribly special that changes this process for a S2D cluster. As far as RDS is concerned, this is an ordinary Failover Cluster with Cluster Shared Volumes. The fact that RDS is running on S2D and “HCI” is truly inconsequential.As long as the RDVH or RDSH roles are properly installed and enabled within the RDS deployment, the RDCB will be able to deploy and broker connections to them. One of the most important configuration items is ensuring that all servers, virtual and physical, that participate in your RDS deployment, are listed in the Servers tab. RDS is reliant on Server Manager and Server Manager has to know who the players are. This is how you tell it.

![image_thumb[1] image_thumb[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEheZkvMYohSUbUR1uhqJegVdw8M9VW5VD-QUfXArrtIgqm9x2V_eqFrIzPgPoaIplP-akM8NNMbMc1XfEK9BzSnx5Uy3leXaL8368PKw8EnewmMofpLkwsGrU5lp6Dmh17i-9qkNrT2CszY/?imgmax=800)

To use the S2D volumes for RDS, point your VM deployments at the proper CSVs within the C:\ClusterStorage paths. When building your collection, make sure to have the target folder already created within the CSV or collection creation will fail. Per the example below, the folder “Pooled1” needed to be manually created prior to collection creation.

RDS is cluster aware in that the VMs it creates can be created as HA within the cluster and the RD Connection Broker (RDCB) remains aware as to which host is running which VMs should they move. In the event of a failure, VMs will be moved to a surviving host by the cluster service and the RDCB will keep track.

Part 2: Prep & Configuration (you are here)

Part 3: Performance & Troubleshooting

Resources

S2D in Server 2016S2D Overview

Working with volumes in S2D

Cache in S2D

0 Comments