Part 2: Prep & Configuration

Part 3: Performance & Troubleshooting (you are here)

Make sure to check out my series on RDS in Server 2016 for more detailed information on designing and deploying RDSH or RDVH.

Performance

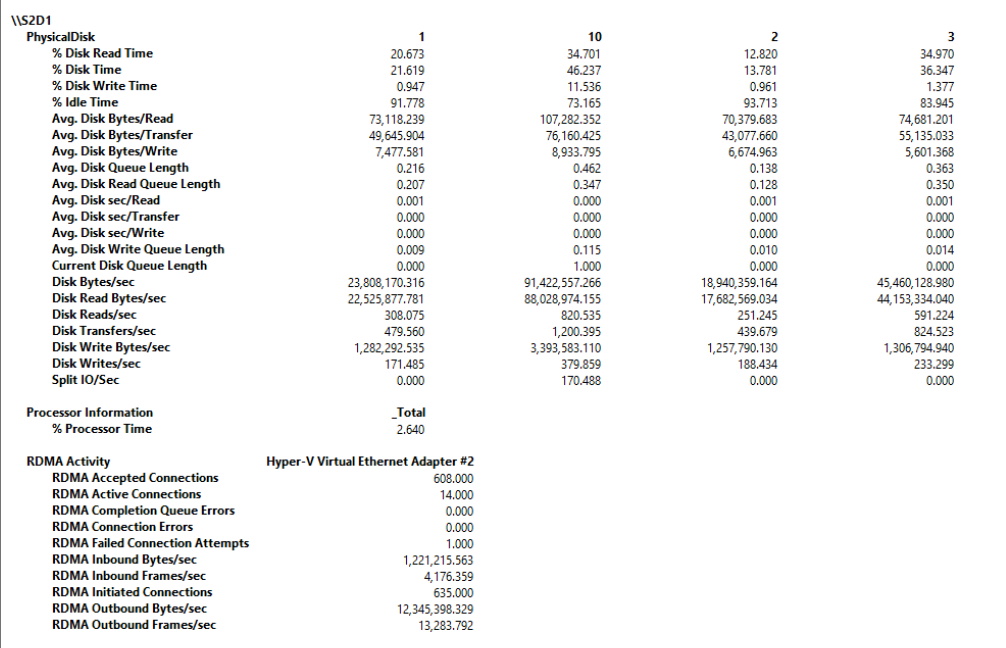

This section gives an idea of disk activity and performance for this specific configuration as it relates to S2D and RDS.

Here is the 3-way mirror in action during collection provisioning: 3 data copies, 1 per host, with all hosts active, as expected:

Real-time disk and RDMA stats during collection provisioning:

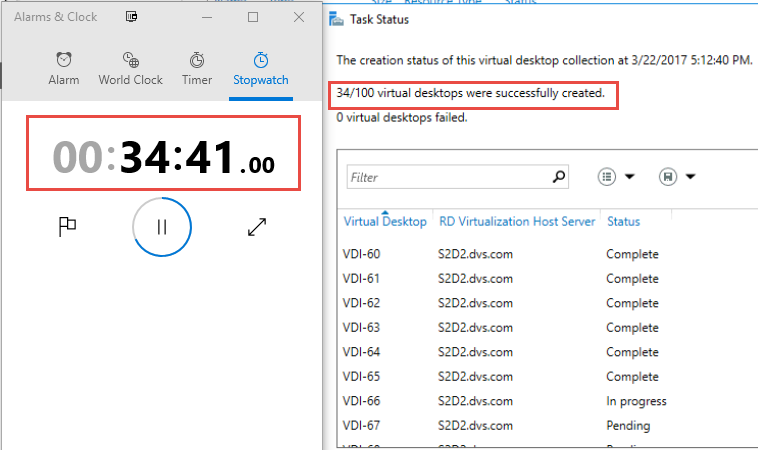

RDS provisioning isn’t the speediest solution in the world by default as it creates VMs one by one. But it’s fairly predictable at 1 VM per minute, so plan accordingly.
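For planning purposes, the observed rate can be turned into a rough time estimate. This is a minimal sketch, not a measured model: the 1 minute per VM default comes from this lab, while the `parallel` parameter is an assumption that treats simultaneous VM creation as scaling ideally, which real storage and network limits will likely not allow.

```python
import math


def provisioning_minutes(vm_count: int, minutes_per_vm: float = 1.0, parallel: int = 1) -> float:
    """Estimate wall-clock minutes to provision a desktop collection.

    minutes_per_vm: serial rate observed in this lab (about 1 VM per minute).
    parallel: ASSUMPTION, idealized number of VMs built at the same time;
              actual scaling was not measured here.
    """
    if vm_count < 0 or parallel < 1:
        raise ValueError("vm_count must be >= 0 and parallel must be >= 1")
    # VMs are built in batches of `parallel`; each batch takes one VM's time.
    batches = math.ceil(vm_count / parallel)
    return batches * minutes_per_vm


if __name__ == "__main__":
    print(provisioning_minutes(30))              # one-by-one provisioning
    print(provisioning_minutes(30, parallel=5))  # hypothetical ideal scaling
```

Treat the parallel figure as a best case; the serial number is the safer one to plan a maintenance window around.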

Optionally, you can adjust the number of VMs RDS creates in parallel using the Set-RDVirtualDesktopConcurrency cmdlet. Server 2012 R2 supported a maximum of 20 concurrent operations, but make sure the infrastructure can handle whatever you set here.

Set-RDVirtualDesktopConcurrency -ConnectionBroker "RDCB name" -ConcurrencyFactor 20

To illustrate the capacity impact of 3-way mirroring, take my lab configuration, which provides my 3-node cluster with 14TB of total capacity, each node contributing 4.6TB. I have 3 fixed-provisioned volumes totaling 4TB, with just under 2TB remaining. In other words, roughly 30% of my raw capacity is usable, as expected when every block is stored three times. This ratio improves at larger scales, starting with 4 nodes, so keep this in mind when building your deployment.
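The capacity figures above can be checked with some quick arithmetic. This is a rough sketch using the rounded numbers from my lab (14TB raw, 4TB of volumes); the function names are made up for illustration, and it ignores any reserve capacity or metadata overhead the pool may hold back.

```python
def mirror_usable_tb(raw_tb: float, copies: int = 3) -> float:
    """Upper bound on usable capacity when every block is stored `copies` times."""
    if copies < 1:
        raise ValueError("copies must be >= 1")
    return raw_tb / copies


def pool_footprint_tb(volume_tb: float, copies: int = 3) -> float:
    """Raw pool capacity consumed by fixed-provisioned mirrored volumes."""
    return volume_tb * copies


raw_tb = 14.0      # total raw capacity across the 3 nodes (rounded)
volumes_tb = 4.0   # sum of the 3 fixed-provisioned volumes (rounded)

footprint_tb = pool_footprint_tb(volumes_tb)   # raw capacity the volumes consume
remaining_raw_tb = raw_tb - footprint_tb       # raw capacity left in the pool
provisioned_fraction = volumes_tb / raw_tb     # share of raw capacity exposed as volumes
ceiling_tb = mirror_usable_tb(raw_tb)          # theoretical 3-way mirror maximum

if __name__ == "__main__":
    print(f"Footprint on pool: {footprint_tb:.1f} TB")
    print(f"Raw remaining:     {remaining_raw_tb:.1f} TB")
    print(f"Provisioned share: {provisioned_fraction:.1%}")
    print(f"3-way ceiling:     {ceiling_tb:.2f} TB")
```

With these rounded inputs the volumes consume about three times their size in raw capacity, which lines up with the "just under 2TB remaining" figure and lands near the one-third ceiling of a 3-way mirror.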

Here is the disk performance view from one of the Win10 VMs within the pool (VDI-23) running CrystalDiskMark, which runs DiskSpd commands behind the scenes. Read IOPS are respectable at an observed max of 25K during the sequential test.

![image_thumb[3] image_thumb[3]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEg5lXCuqKol45tojLAQSPVuG4UpmF8kXswDBcn-L-qIt3QOZGTdXtt4Ipo-pZKqjD34865diUSM5T5zGyKwn1GVVfEt8wn3OOqBWm_xTK_8IS8KFsNgu6q2I_Ka7tEfphMICgDFRXJnkS1L/?imgmax=800)

Write IOPS are also respectable, at an observed max of nearly 18K during the sequential test.

![image_thumb[4] image_thumb[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgqYVh2uzPNvP6EmX34SFqSqMZOVz8OXpSUxtVUUMxSJGaTB34JmInDXE_ENgm9JugaEdBlSiJ3f1gF25mMzRWggQWG2msOWv-_oHbb4RyYHfNU3y-I_9LQb7wERYEgczbmqq-WstXGev00/?imgmax=800)

Writing to the same volume and running the same benchmark, but from the host itself, I saw some wildly differing results. Bandwidth numbers were similar, but most tests made a poorer showing for some reason. One run yielded some staggering numbers on the random 4K tests, which I was unable to reproduce reliably.

![image_thumb[5] image_thumb[5]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgOxslB1DmtpEyZbOtg_OeYxzOe4jAZBTALFLw2eHIvsnzAQvg-iWrHFYl9h_c19aGeU0NuVrSAeEFO4CPli7Jydn3gdkKkhwnn60VJ-8RLN_3LaojcdpHQ1lfCCaG_2-MCPtysxU8uF6WM/?imgmax=800)

Diskspd.exe -b4k -d60 -L -o2 -t4 -r -w70 -c500M c:\ClusterStorage\Volume1\RDSH\io.dat

![image_thumb[6] image_thumb[6]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjjq6k5m8AraaJwjB2w_NvniSxGHi8yJ_f-nw68-Sg_s8EdGgc_6ehGyLUJyMkPbXJJjDEKS5j3wLBPmhb2nioY6eirXd2rX_YgyiiJKx6X16LX_7zc3-H_07F8kMN_FTTVo1cRhafkfUAU/?imgmax=800)

Just for comparison, here is another run with cache disabled. As expected, not so impressive.

![image_thumb[8] image_thumb[8]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEg1bBA1sekzSbEufA736eVsHBFWprUkBPv_uvSxceMkIauA5VhrwW4zNnHOkqzTel9px28817KipB0tyeck8H2CsejlxM0NdfuLI_YlUUwXSuHlPW_KqCET9KH4y7VLoit8wpYemiYhlZcM/?imgmax=800)

Troubleshooting

Here are a few things to watch out for along the way.

When deploying your desktop collection, you may get an error about a node not having a virtual switch configured.

One possible cause is that the default SETSwitch was not configured for RDMA on that particular host. Confirm and then enable per the steps above using Enable-NetAdapterRDMA.

On the topic of VM creation within the cluster, here is something kooky to watch out for. If you are working on one of your cluster nodes locally and try to add a new VM to be hosted on a different node, you may have trouble. When you get to the OS install portion, where the UI offers to attach an ISO to boot from, pointing to an ISO sitting on a network share may produce the following error: Failing to add the DVD device due to lack of permissions to open attachment.

You will also be denied if you try to access any of the local paths such as desktop or downloads.

Watch this space, more to come…


Resources

S2D in Server 2016

S2D Overview

Working with volumes in S2D

Cache in S2D
